Cuda Matrix Multiplication Size

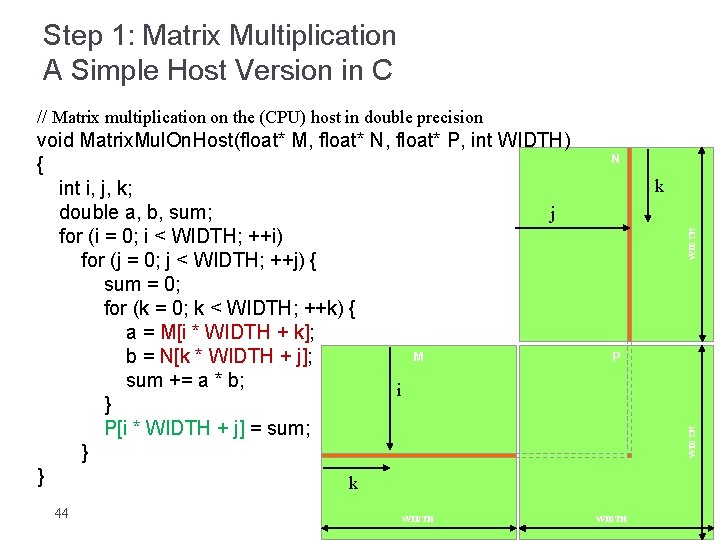

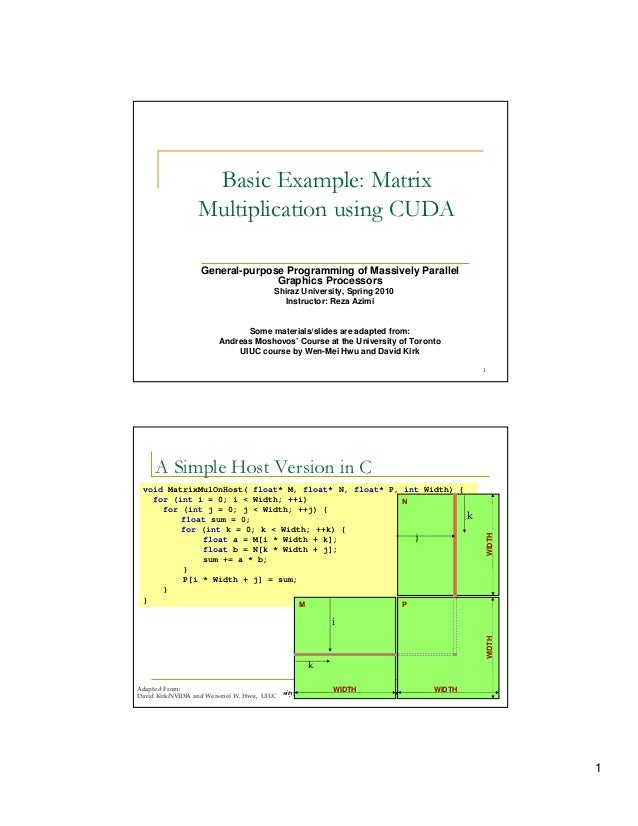

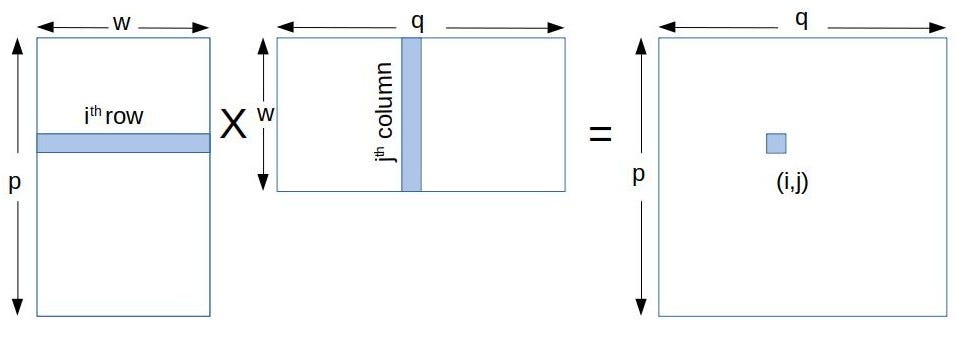

Int ty threadIdx. To calculate ij th element in C we need to multiply i th row of A with j th column in B Fig1.

5kk73 Gpu Assignment Website 2014 2015

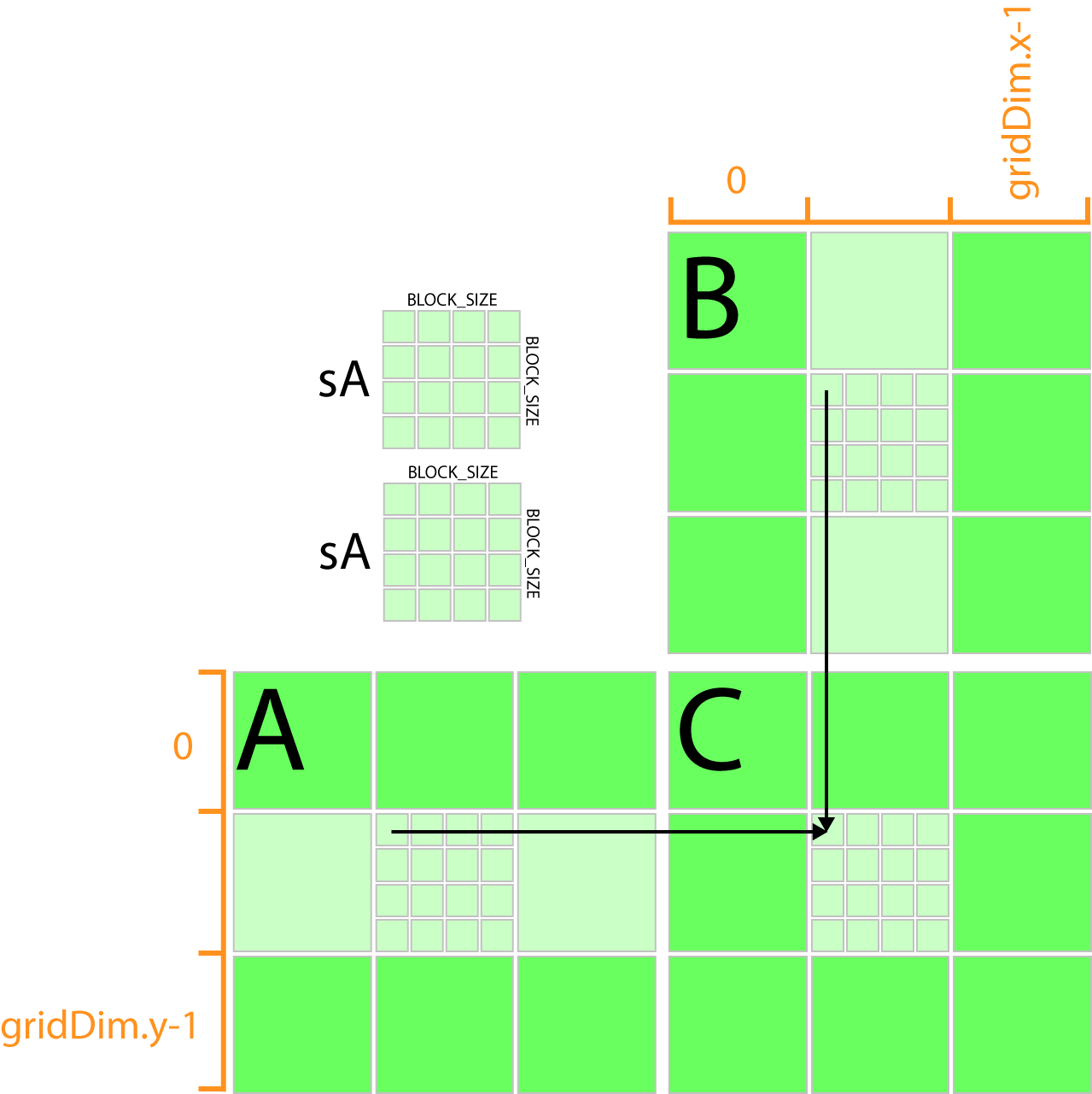

Size BLOCK_SIZE.

Cuda matrix multiplication size. CPU float malloc vector_size. Dim3 block BLOCK_SIZE BLOCK_SIZE. Size_t size Awidth Aheight sizeoffloat.

Allocate array on host for CPU_matrix_multiplication result. CudaMalloc void. Allocate array on device for LHS operand.

Int ty threadIdx. PrintfCopy A to device. Err cudaMemcpyd_Aelements Aelements size cudaMemcpyHostToDevice.

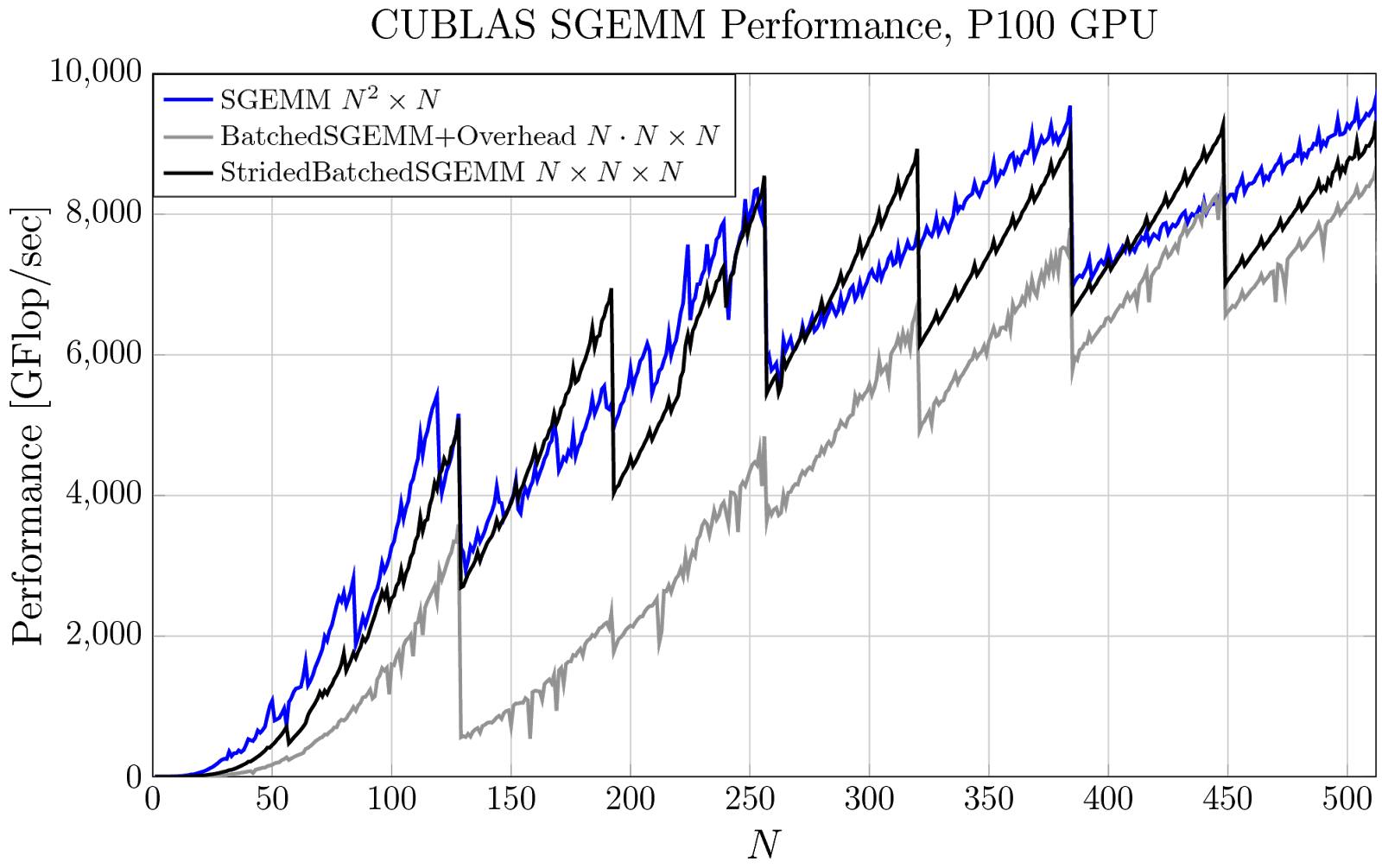

Size BLOCK_SIZE 1. And cuDNN 763 Tensor Cores may be used regardless but efficiency is better when matrix dimensions are multiples of 16 bytes. CudaError_t err cudaMalloc.

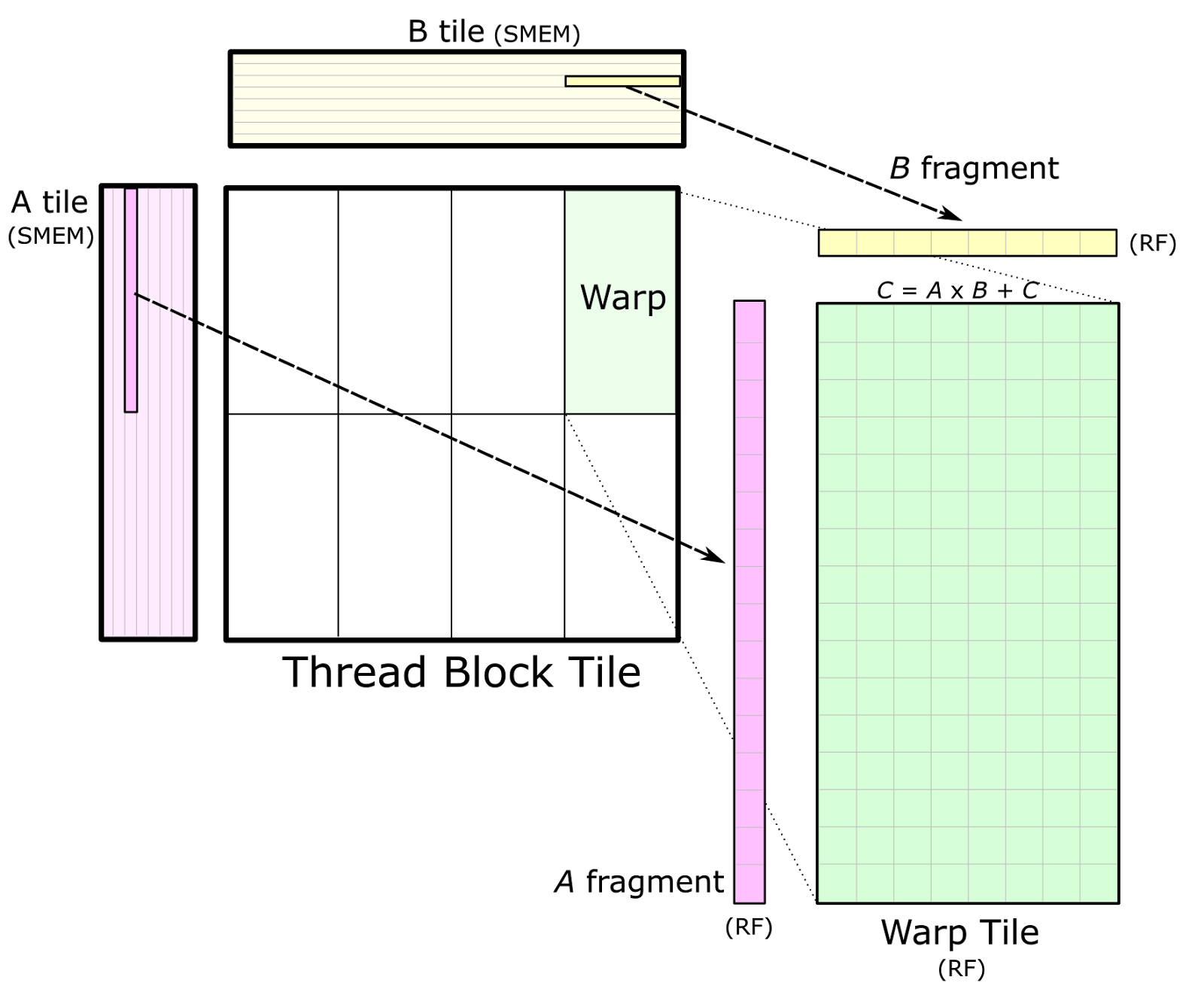

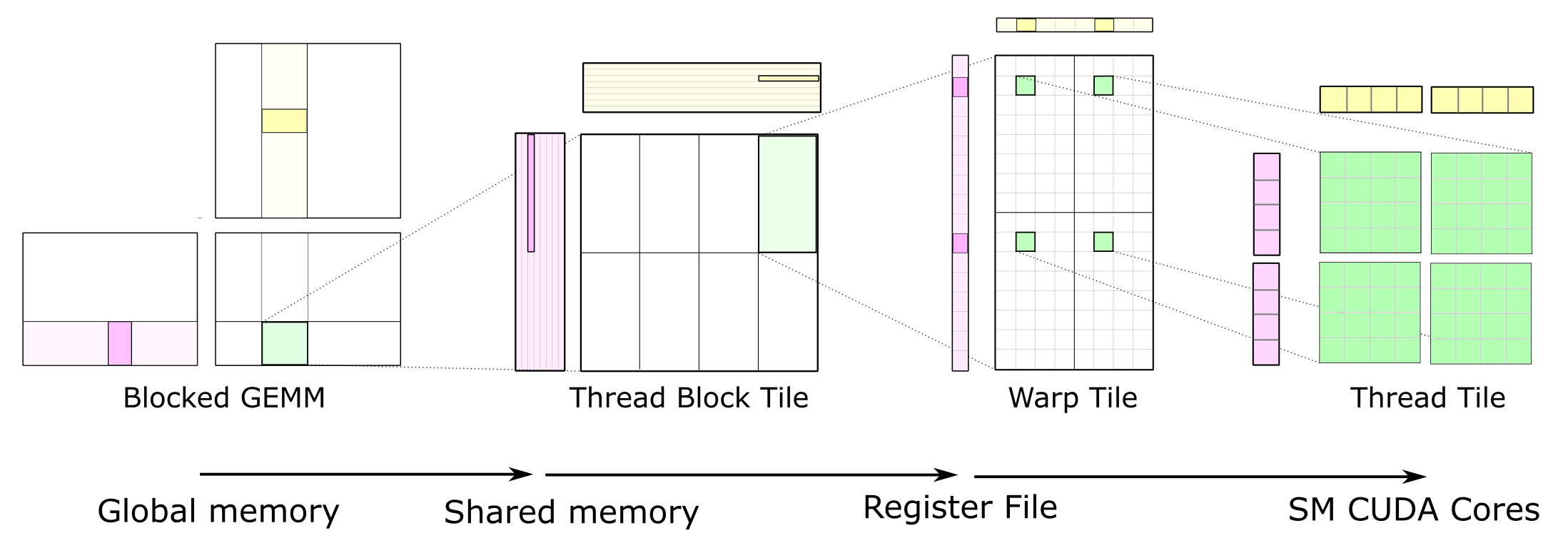

CUDA Programming Guide Version 11 67 Chapter 6. Writefile matmul_sharedcu define WIDTH 4096 __global__ void matmul_kernel float C float A float B __shared__ float sA block_size_yblock_size_x. D_Awidth d_Astride Awidth.

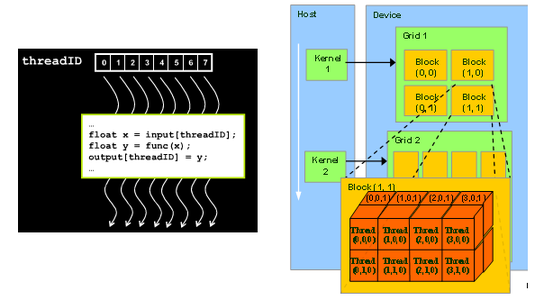

The formula used to calculate elements of d_P is d_Pxy 𝝨. Each thread block is responsible for computing one square sub-matrix C sub of C. Int tx threadIdx.

Random facts about NCSA systems GPUs and CUDA QP Lincoln cluster configurations Tesla S1070 architecture Memory alignment for GPU CUDA APIs Matrix-matrix multiplication example K1. Include helper_cudah Matrix multiplication CUDA Kernel on the device. Int Col blockDimxblockIdxx threadIdxx.

Int x blockIdx. Float sum 00. Perform CUDA matrix multiplication.

Void MatMulconst Matrix A const Matrix B Matrix C Load A and B to device memory Matrix d_A. Example of Matrix Multiplication 61 Overview The task of computing the product C of two matrices A and B of dimensions wA hA and wB wA respectively is split among several threads in the following way. K numAColumns - 1 Tile_size 1.

Csrmul_kernelAp Aj Av num_rows x y. D_Awidth Awidth. So an individual element in C will be a vector-vector.

Y block_size_y ty. I thus have a kernel that utilizes tiling. Matrix multiplication between a IxJ matrix d_M and JxK matrix d_N produces a matrix d_P with dimensions IxK.

Or any size up to 512 unsigned int nblocks num_rows blocksize 1 blocksize. Each thread within the block is responsible for. For int k 0.

Int y blockIdx. X block_size_x tx. 4 Code for Matrix Multiplication using Shared Memory36 41 multShareh.

Fprintf stderr Matrix multiplication on CPU took 12f seconds n floatafter - before 1000000000. I am studying matrix multiplication and its various different ways in which it can be optimized on a GPU. Float Cvalue 00.

Size_t sizeA Awidth Aheight sizeoffloat. __shared__ float sB block_size_yblock_size_x. But when the matrix size exceeds 320 like 321 the matrix product produced by GPU is not equal to the result by CPU.

Allocate array on device for RHS operand but this is vector 1xN. An odd bug The code works well when the matrix size is less than 320320 and requesting block size to be 3232. Int tx threadIdx.

A block of BLOCK_SIZE x BLOCK_SIZE CUDA threads. For example when using FP16 data each FP16 element is represented by 2 bytes so matrix dimensions would need to be multiples of 8 elements for best efficiency or 64 elements on A100. Int by blockIdx.

D_Bwidth Bwidth. The difference between them is very tiny like the scale of 1e-5. Size_t sizeB Bwidth Bheight sizeoffloat.

Int bx blockIdx. C A B wA is As width and wB is Bs width template __global__ void MatrixMulCUDA float C float A float B int wA int wB Block index. Matrix multiplication is simple.

Parallel sparse matrixvector multiplication unsigned int blocksize 128. The code to launch the above parallel kernel is. CudaMalloc void.

For k 0. Matrix-matrix multiplication with blocks Ckl i1 N Aki Bil C kl i1 N2 Aki Bil iN2 1 N Aki Bil For each element Set result to zero For each pair of blocks Copy data Do partial sum Add result of. CudaMemcpyd_Aelements Aelements sizeA cudaMemcpyHostToDevice.

Dim size BLOCK_SIZE 0. K if Row numARows threadIdxx kTile_size numAColumnsCopy Data to Tile from Matrix Global Memory to Shared Memory sAthreadIdxythreadIdxx ARownumAColumns threadIdxx kTile_size.

Using The Cuda Programming Model Leveraging Gpus For

Cs Tech Era Tiled Matrix Multiplication Using Shared Memory In Cuda

Cuda Memory Model 3d Game Engine Programming

Cuda Tiled Matrix Multiplication Explanation Stack Overflow

Https Edoras Sdsu Edu Mthomas Sp17 605 Lectures Cuda Mat Mat Mult Pdf

Matrix Multiplication Using Cuda

Pro Tip Cublas Strided Batched Matrix Multiply Nvidia Developer Blog

Massively Parallel Programming With Gpus Computational Statistics In Python 0 1 Documentation

Opencl Matrix Multiplication Sgemm Tutorial

5kk73 Gpu Assignment Website 2014 2015

Matrix Multiplication In Cuda A Simple Guide By Charitha Saumya Analytics Vidhya Medium

Matrix Vector Multiplication In Cuda Benchmarking Performance Stack Overflow

Multiplication Kernel An Overview Sciencedirect Topics

Matrix Vector Multiplication In Cuda Benchmarking Performance Stack Overflow

Simple Matrix Multiplication In Cuda Youtube

Cutlass Fast Linear Algebra In Cuda C Nvidia Developer Blog

Introduction To Cuda Lab 03 Gpucomputing Sheffield

Running A Parallel Matrix Multiplication Program Using Cuda On Futuregrid

Cutlass Fast Linear Algebra In Cuda C Nvidia Developer Blog