Faster Matrix Multiplication Via Sparse Decomposition

Journal of Symbolic Computation, 1990. Scan queues to compute NST and concatenate the result to obtain ET; end of TRANSPOSE. Two subroutines, one called PACKING for converting a conventional.

Pdf Tensor Decompositions And Applications Semantic Scholar

Spencer 1942) to get an algorithm with running time $\approx O(n^{2.376})$.

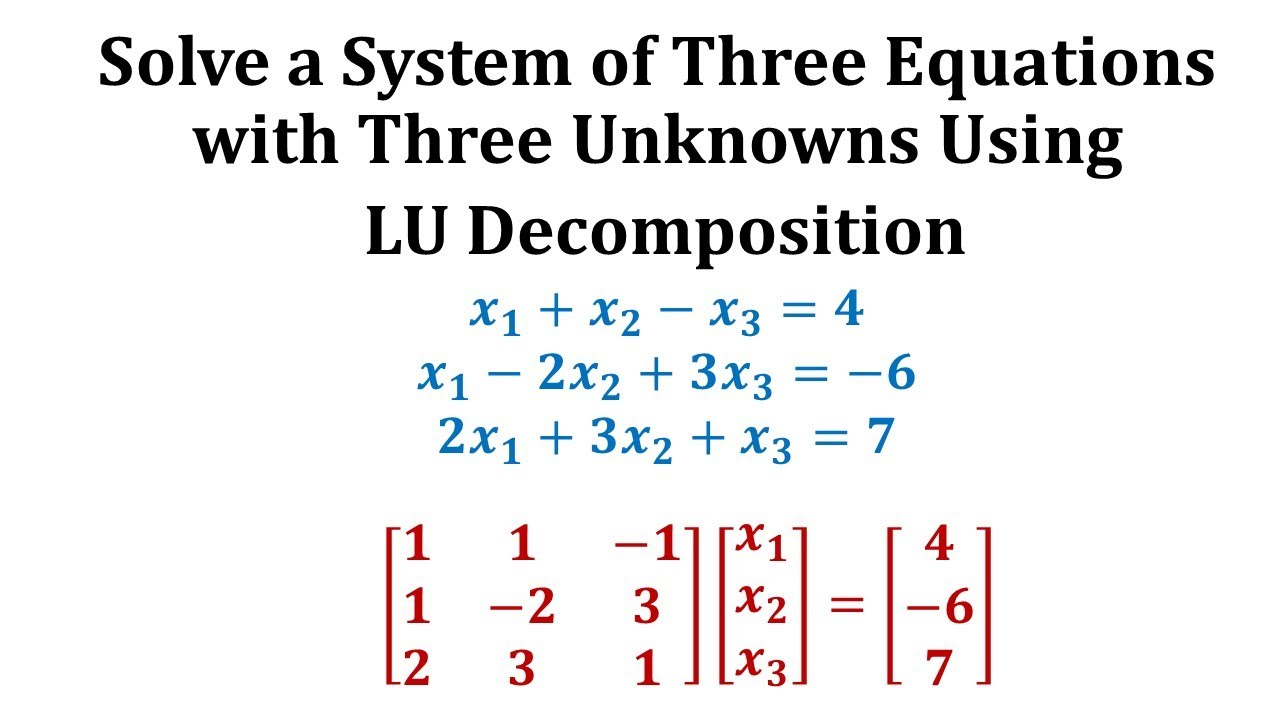

Faster matrix multiplication via sparse decomposition. `W_plus_bias = tf.concat(1, [W, tf.convert_to_tensor(user_bias, dtype=float32, name="user_bias"), tf.ones((num_users, 1), dtype=float32, name="item_bias_ones")])`. To the item matrix we add a row of 1s to multiply the user bias by, and a bias row holding the bias of each item. In numerical analysis and linear algebra, lower-upper (LU) decomposition or factorization factors a matrix as the product of a lower triangular matrix and an upper triangular matrix. Many algorithms with low asymptotic cost have large leading coefficients and are thus impractical.
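As a small illustration of the LU factorization described above, here is a minimal sketch in plain Python. It assumes the Doolittle convention (unit diagonal on the lower factor) and performs no pivoting, so it fails on a zero pivot; the function names `lu_decompose` and `matmul` are hypothetical and not taken from the source.

```python
def lu_decompose(a):
    """Doolittle LU factorization without pivoting: returns (lower, upper) with a = lower * upper."""
    n = len(a)
    lower = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    upper = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Fill row i of the upper triangular factor.
        for j in range(i, n):
            upper[i][j] = a[i][j] - sum(lower[i][k] * upper[k][j] for k in range(i))
        if upper[i][i] == 0.0 and i < n - 1:
            # Assumption: no row exchanges, so a zero pivot is reported as an error.
            raise ZeroDivisionError("zero pivot; a permutation matrix would be needed")
        # Fill column i of the lower triangular factor (below the diagonal).
        for j in range(i + 1, n):
            partial = sum(lower[j][k] * upper[k][i] for k in range(i))
            lower[j][i] = (a[j][i] - partial) / upper[i][i]
    return lower, upper


def matmul(x, y):
    """Plain triple-loop matrix product, used only to check the factorization."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


A = [[4.0, 3.0], [6.0, 3.0]]
L, U = lu_decompose(A)
print(L)  # [[1.0, 0.0], [1.5, 1.0]]
print(U)  # [[4.0, 3.0], [0.0, -1.5]]
```

Multiplying the two factors back together with `matmul(L, U)` reproduces `A`. A production routine would add partial pivoting, which is where a permutation matrix enters the product.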

More information on the fascinat-. Statistical Analysis and Data Mining. DnumGood for int r0.

C valuemodAdd value modMultiply vandermondeget r c data dc. In this paper we present an algorithm that solves linear systems in sparse matrices asymptotically faster than matrix multiplication for any $\omega > 2$. $T = \sum_{r=1}^{R} u_r \circ v_r \circ w_r$. Here's an encoding of our matrix multiplication algorithm.

The currently fastest matrix multiplication algorithm, with a complexity of $O(n^{2.38})$, was obtained by Coppersmith and Winograd (1990). Thus the data reuse for the inner product approach is $O(\mathit{nnz}'/N^2)$. In short, I need to compute the following triple sparse matrix product.

Fast matrix multiplication algorithms are of practical use only if the leading coefficient of their arithmetic complexity is sufficiently small. Fast and stable matrix multiplication p744. Consequently, Strassen-Winograd's $O(n^{\log_2 7})$ algorithm often outperforms other fast matrix multiplication algorithms for all feasible matrix dimensions.

I can make this faster by manually inlining the matrix multiplication. This speedup holds for any input matrix A with op n. Fast sparse matrix multiplication Step 1.

Following Zienkiewicz's Finite Element Method text, I am solving a linear elasticity problem with linked subdomains. As the number of non-zeros in the output matrix is $\mathit{nnz}'$, the probability that such index matching produces a useful output (i.e., any of the two indices actually matched) is $\mathit{nnz}'/N^2$. Fast sparse matrix multiplication, Raphael Yuster and Uri Zwick. Abstract: Let A and B be two $n \times n$ matrices over a ring R (e.g., the reals or the integers), each containing at most m non-zero elements.

Karstadt and Schwartz have recently demonstrated a technique that reduces the leading coefficient by introducing fast $O(n^2 \log n)$ basis. The product sometimes includes a permutation matrix as well. Used a theorem on dense sets of integers containing no three terms in arithmetic progression (R.

The leading coefficient of Strassen-Winograd's algorithm has been generally believed to be optimal for matrix multiplication algorithms with a $2 \times 2$ base case, due to the lower bounds by Probert (1976) and Bshouty (1995). $\ldots 1/\log(\kappa(A))$ non-zeros, where $\kappa(A)$ is the condition number of $A$. Many improvements then followed.

LU decomposition can be viewed as the matrix form of Gaussian elimination. Computers usually solve square systems of linear equations using LU. The key observation is that multiplying two $2 \times 2$ matrices can be done with only 7 multiplications instead of the usual 8, at the expense of several additional addition and subtraction operations.
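The 7-multiplication observation can be made concrete with a short sketch. The formulas below are the classical Strassen products for a $2 \times 2$ base case (not the Winograd variant, which uses fewer additions); the function name `strassen_2x2` is hypothetical.

```python
def strassen_2x2(a, b):
    """Multiply two 2x2 matrices with 7 scalar multiplications (classical Strassen)."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b

    # The seven products; each uses exactly one multiplication.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)

    # Recombine with additions and subtractions only.
    return [[m1 + m4 - m5 + m7, m3 + m5],
            [m2 + m4, m1 - m2 + m3 + m6]]


print(strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Applied recursively to matrix blocks instead of scalars, the same seven products give the divide-and-conquer algorithm; the extra additions are what drive the leading coefficient.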

For output r ovalue. Strassen's algorithm improves on naive matrix multiplication through a divide-and-conquer approach. (1969) was the first to show that the naïve algorithm is not optimal, giving an $O(n^{2.81})$ algorithm for the problem.

We present a new algorithm that multiplies A and B using $O(m^{0.7} n^{1.2} + n^{2+o(1)})$ algebraic operations (i.e., multiplications, additions and subtractions) over R. The naive matrix multiplication. $T \times_1 a \times_2 b = \sum_{r=1}^{R} (u_r \circ v_r \circ w_r) \times_1 a \times_2 b = \sum_{r=1}^{R} \left[(a^T u_r)(b^T v_r)\right] w_r = c$. Matrix multiplication using low-rank decomposition: here's the matrix multiplication as tensor operation again.
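To make the low-rank view concrete: a rank-R decomposition of the matrix multiplication tensor gives $c = \sum_{r=1}^{R} (a^T u_r)(b^T v_r)\, w_r$, where $a$, $b$ and $c$ are the flattened input and output matrices. The sketch below assumes row-major flattening of $2 \times 2$ matrices and uses Strassen's rank-7 factors as the example; the names `U`, `V`, `W` and `multiply_via_decomposition` are illustrative choices, not taken from the source.

```python
# Rows of U, V, W are the factor vectors u_r, v_r, w_r (r = 1..7) of Strassen's
# rank-7 decomposition, for vectors ordered as [x11, x12, x21, x22].
U = [[1, 0, 0, 1], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 0, 1],
     [1, 1, 0, 0], [-1, 0, 1, 0], [0, 1, 0, -1]]
V = [[1, 0, 0, 1], [1, 0, 0, 0], [0, 1, 0, -1], [-1, 0, 1, 0],
     [0, 0, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1]]
W = [[1, 0, 0, 1], [0, 0, 1, -1], [0, 1, 0, 1], [1, 0, 1, 0],
     [-1, 1, 0, 0], [0, 0, 0, 1], [1, 0, 0, 0]]


def dot(x, y):
    """Inner product of two equal-length vectors."""
    return sum(xi * yi for xi, yi in zip(x, y))


def multiply_via_decomposition(a, b, U, V, W):
    """Compute c = sum_r (a . u_r)(b . v_r) w_r; one scalar product per rank-one term."""
    c = [0] * len(W[0])
    for u, v, w in zip(U, V, W):
        scale = dot(a, u) * dot(b, v)  # the single "active" multiplication for term r
        for k, wk in enumerate(w):
            c[k] += scale * wk
    return c


a = [1, 2, 3, 4]  # [[1, 2], [3, 4]] flattened row-major
b = [5, 6, 7, 8]  # [[5, 6], [7, 8]] flattened row-major
print(multiply_via_decomposition(a, b, U, V, W))  # [19, 22, 43, 50]
```

The rank R equals the number of multiplications between input-dependent quantities, which is why finding decompositions with smaller R yields faster algorithms.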

We improve the current best running time value to invert sparse matrices over finite fields, lowering it to an expected $O(n^{2.2131})$ time for the current values of fast rectangular matrix multiplication. $T \times_1 a \times_2 b = c$. Here's our low-rank decomposition. Sparse Graph Mining with Compact Matrix Decomposition.

This approach reads a row of sparse matrix A and a column of sparse matrix B, each of which has $\mathit{nnz}/N$ non-zeros, and performs index matching and MACs. Matrix multiplication via arithmetic progressions. R Byte value0.
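The index matching step of the inner product approach can be sketched as a two-pointer merge. The sketch assumes each sparse row or column is stored as a list of `(index, value)` pairs sorted by index; that storage layout and the name `sparse_dot` are assumptions for illustration, not the format used in the source.

```python
def sparse_dot(row, col):
    """Inner product of a sparse row and a sparse column via index matching.

    Both inputs are lists of (index, value) pairs sorted by index.
    Returns (result, matches), where matches counts the multiply-accumulates
    that were actually performed.
    """
    i = j = 0
    total = 0.0
    matches = 0
    while i < len(row) and j < len(col):
        row_idx, row_val = row[i]
        col_idx, col_val = col[j]
        if row_idx == col_idx:
            total += row_val * col_val  # useful MAC: indices matched
            matches += 1
            i += 1
            j += 1
        elif row_idx < col_idx:
            i += 1  # no partner for this row entry
        else:
            j += 1  # no partner for this column entry
    return total, matches


row_of_a = [(0, 2.0), (3, 1.0), (5, 4.0)]
col_of_b = [(1, 3.0), (3, 5.0), (5, 0.5)]
print(sparse_dot(row_of_a, col_of_b))  # (7.0, 2)
```

Every entry is read, but only the matched pairs contribute to the output, which is the source of the low data reuse attributed to this approach.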

Initialize n empty queues. 2008 Tensor-CUR Decompositions for Tensor-Based Data. 2008 Less is More.

05C50, 05C85, 65F50, 68W10. Submitted to the journal's Software and High-Performance Computing section October 5, 2015. The ASA Data Science Journal 1 (1), 6-22.

Open problem even for sparse linear systems with $\mathrm{poly}(n)$ condition number. Scan EA(i) in increasing order of i, use SA to calculate the row and column number of EA(i), and put EA(i) into the j-th queue if it is in the j-th column of A. We achieve the same running time for the computation of the rank and nullspace of a sparse matrix over a finite field.
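The queue-based scan reads like a bucket pass for transposing a sparse matrix: one queue per column, filled in scan order, then concatenated. The sketch below is only an approximation of that idea. It assumes the non-zeros are given as `(row, col, value)` triples in row-major order instead of the SA/EA arrays named in the text, whose exact layout is not shown, and the name `transpose_with_queues` is hypothetical.

```python
from collections import deque


def transpose_with_queues(entries, n_cols):
    """Transpose a sparse matrix given as row-major (row, col, value) triples.

    Returns the triples of the transpose, again in row-major order.
    """
    # Initialize n empty queues, one per column of the input matrix.
    queues = [deque() for _ in range(n_cols)]

    # Scan the entries in order; an entry in column j goes to the j-th queue.
    for row, col, value in entries:
        queues[col].append((col, row, value))  # swap row and column for the transpose

    # Concatenate the queues: queue j holds row j of the transpose, already sorted,
    # because the scan visited the input rows in increasing order.
    transposed = []
    for queue in queues:
        transposed.extend(queue)
    return transposed


entries = [(0, 1, 5), (0, 2, 7), (1, 0, 3), (2, 1, 9)]
print(transpose_with_queues(entries, 3))
# [(0, 1, 3), (1, 0, 5), (1, 2, 9), (2, 0, 7)]
```

The pass touches each non-zero once and each queue once, so the work is linear in the number of non-zeros plus the number of columns.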

Parallel computing, numerical linear algebra, sparse matrix-matrix multiplication, 2.5D algorithms, 3D algorithms, multithreading, SpGEMM, 2D decomposition, graph algorithms. AMS subject classifications. Karstadt and Schwartz have recently demonstrated a technique that reduces the leading coefficient by introducing fast $O(n^2 \log n)$ basis transformations.

Fourier Decomposition Graphical Physics And Mathematics Applied Science Studying Math

Solve A System Of Linear Equations Using Lu Decomposition Youtube

A Literature Survey Of Matrix Methods For Data Science Stoll 2020 Gamm Mitteilungen Wiley Online Library

Matrix Factorization An Overview Sciencedirect Topics

Tensor Robust Principal Component Analysis With A New Tensor Nuclear Norm

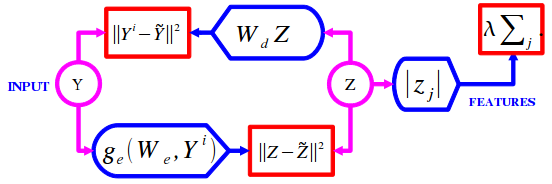

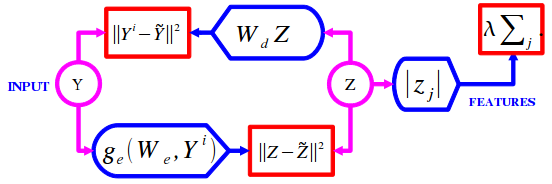

Cbll Research Projects Computational And Biological Learning Lab Courant Institute Nyu

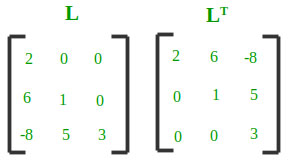

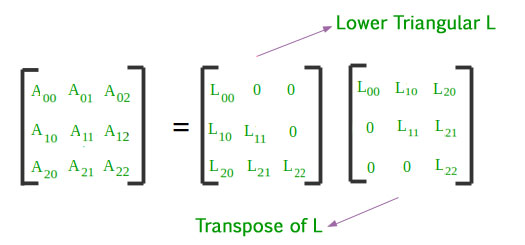

Cholesky Decomposition Matrix Decomposition Geeksforgeeks

Butterflies Are All You Need A Universal Building Block For Structured Linear Maps Stanford Dawn

Low Rank And Sparse Matrix Decomposition Via The Truncated Nuclear Norm And A Sparse Regularizer Springerlink

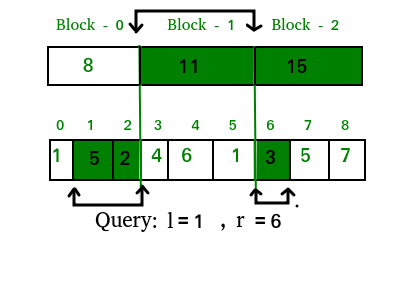

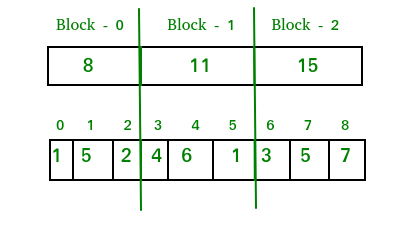

Sqrt Or Square Root Decomposition Technique Set 1 Introduction Geeksforgeeks

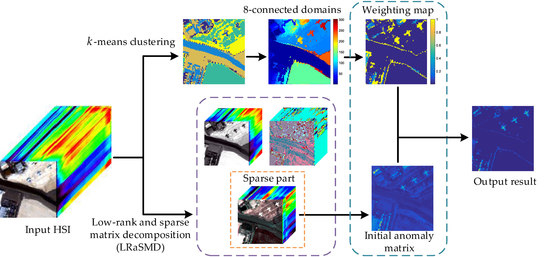

Remote Sensing Free Full Text Low Rank And Sparse Matrix Decomposition With Cluster Weighting For Hyperspectral Anomaly Detection Html

An Efficient Sparse Matrix Format For Accelerating Regular Expression Matching On Field Programmable Gate Arrays Jiang 2015 Security And Communication Networks Wiley Online Library