Cache Blocking Matrix Multiplication C Code

The number of capacity misses clearly depends on the dimension parameters. By default the function does nothing so the benchmark function will report an error.

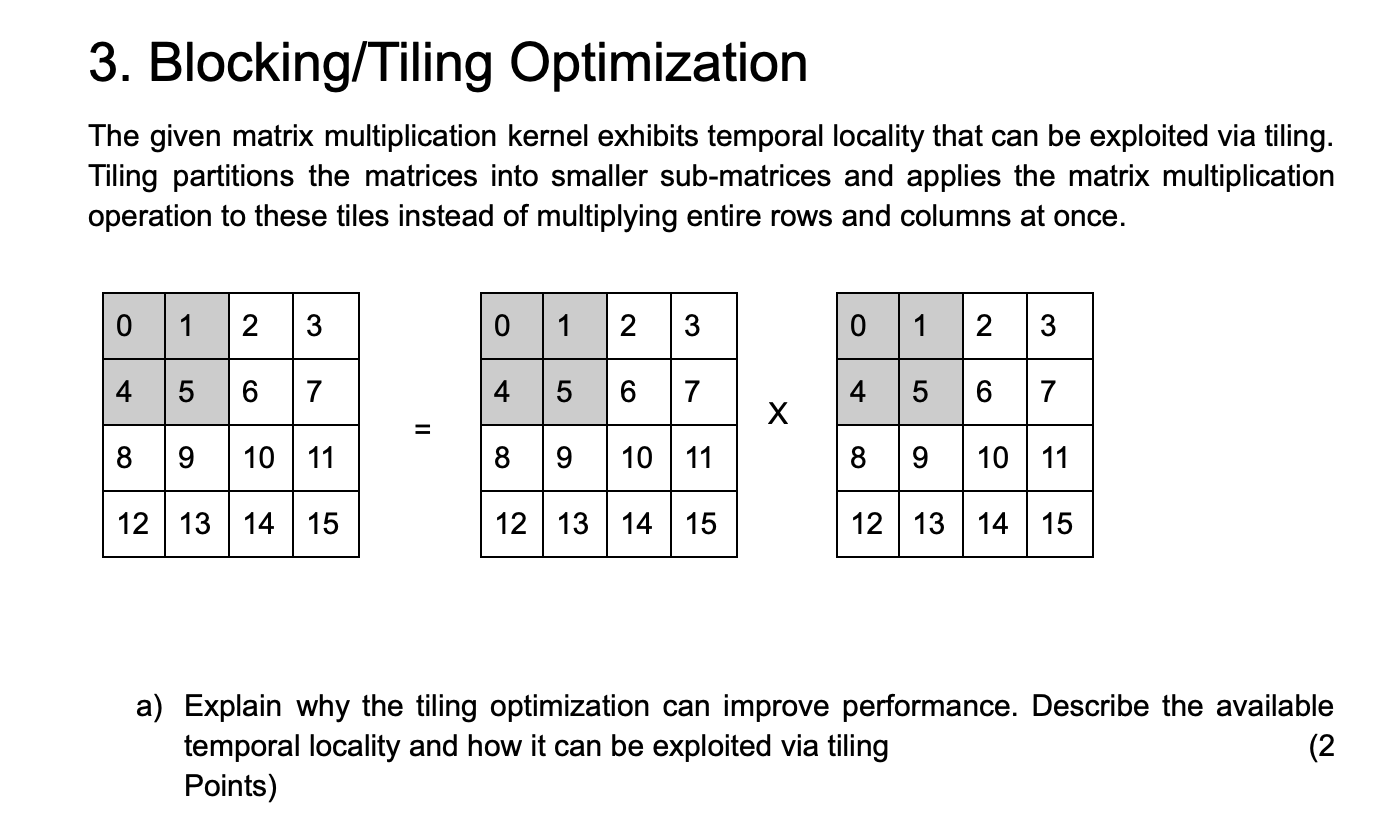

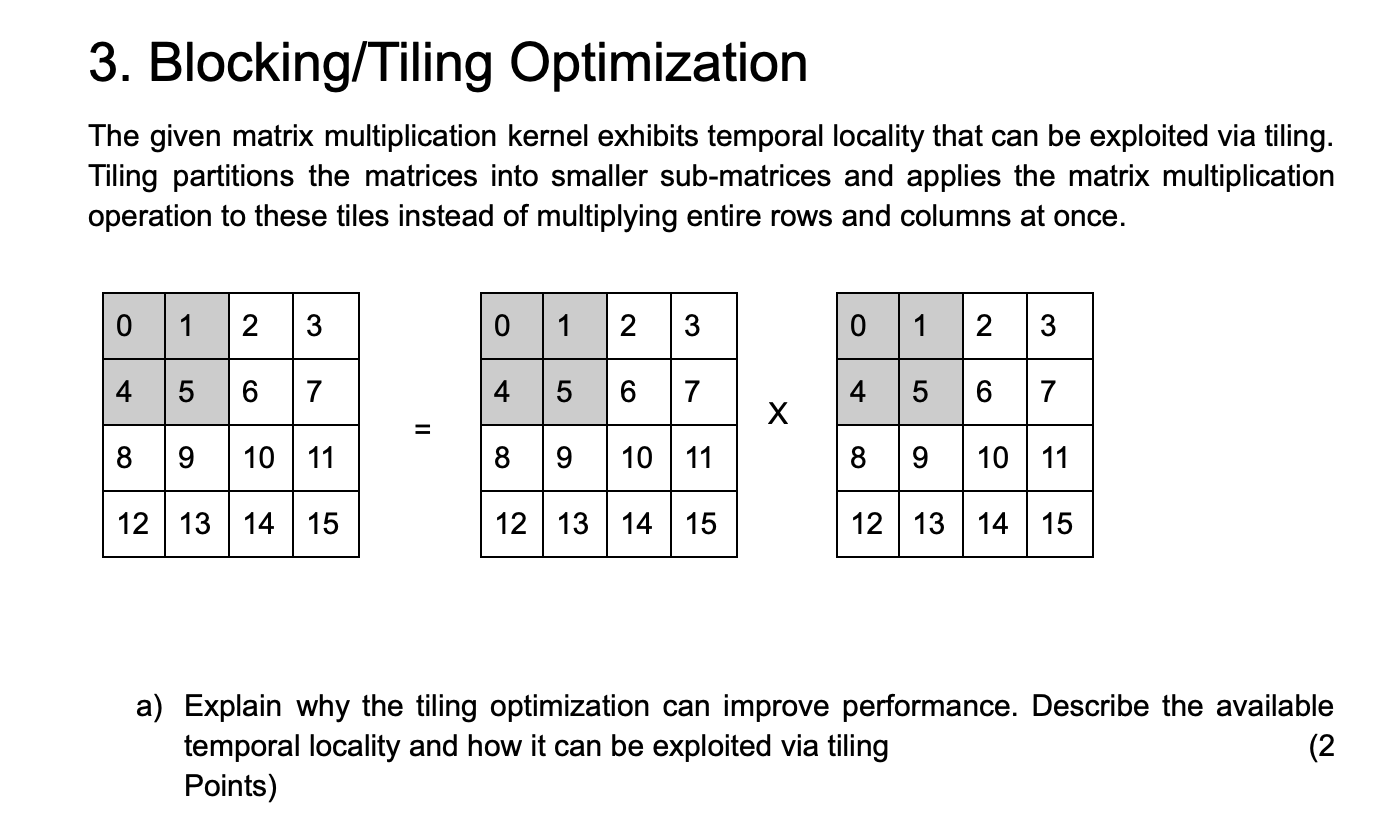

3 Blocking Tiling Optimization The Given Matrix Chegg Com

In this video well start out talking about cache lines.

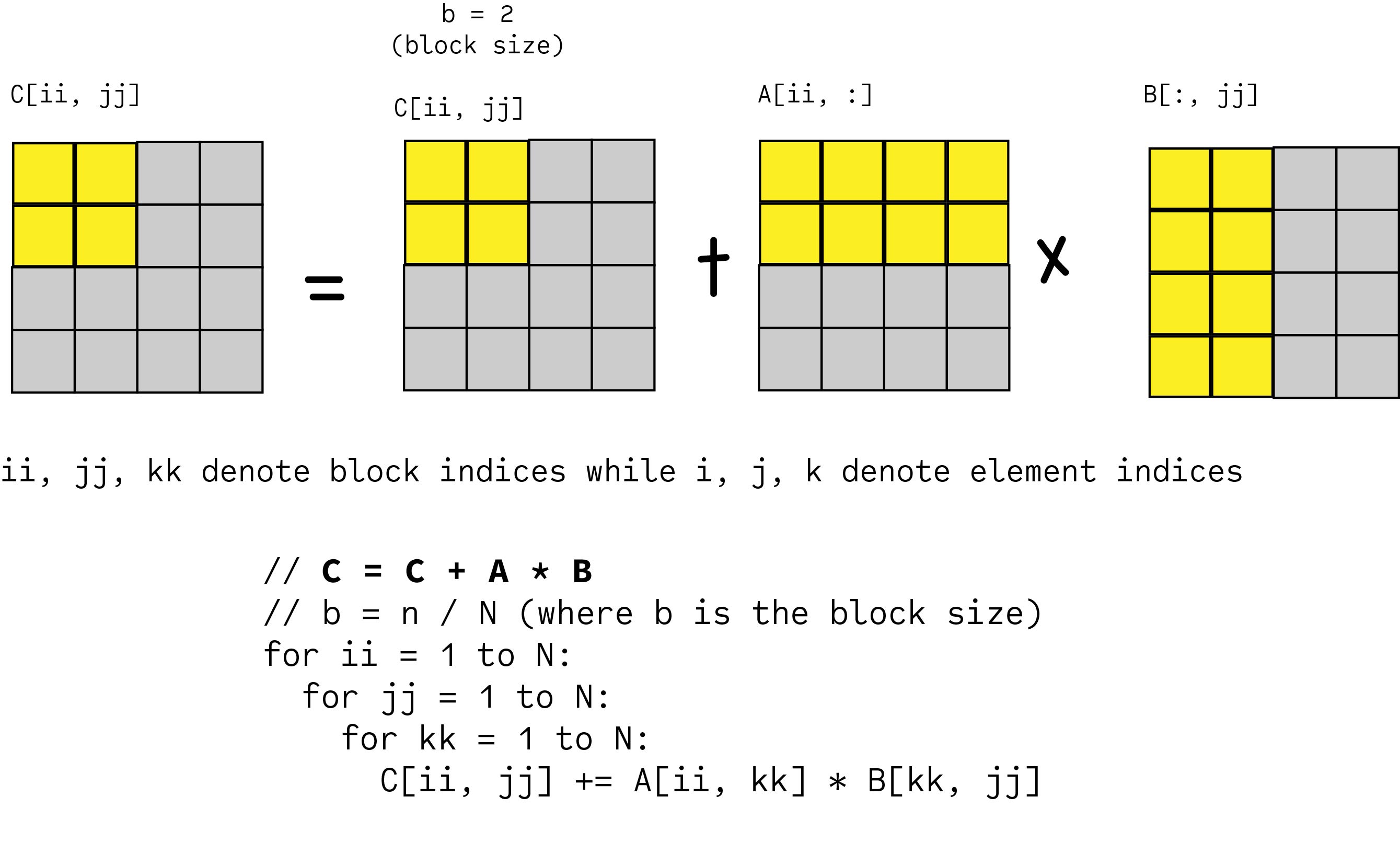

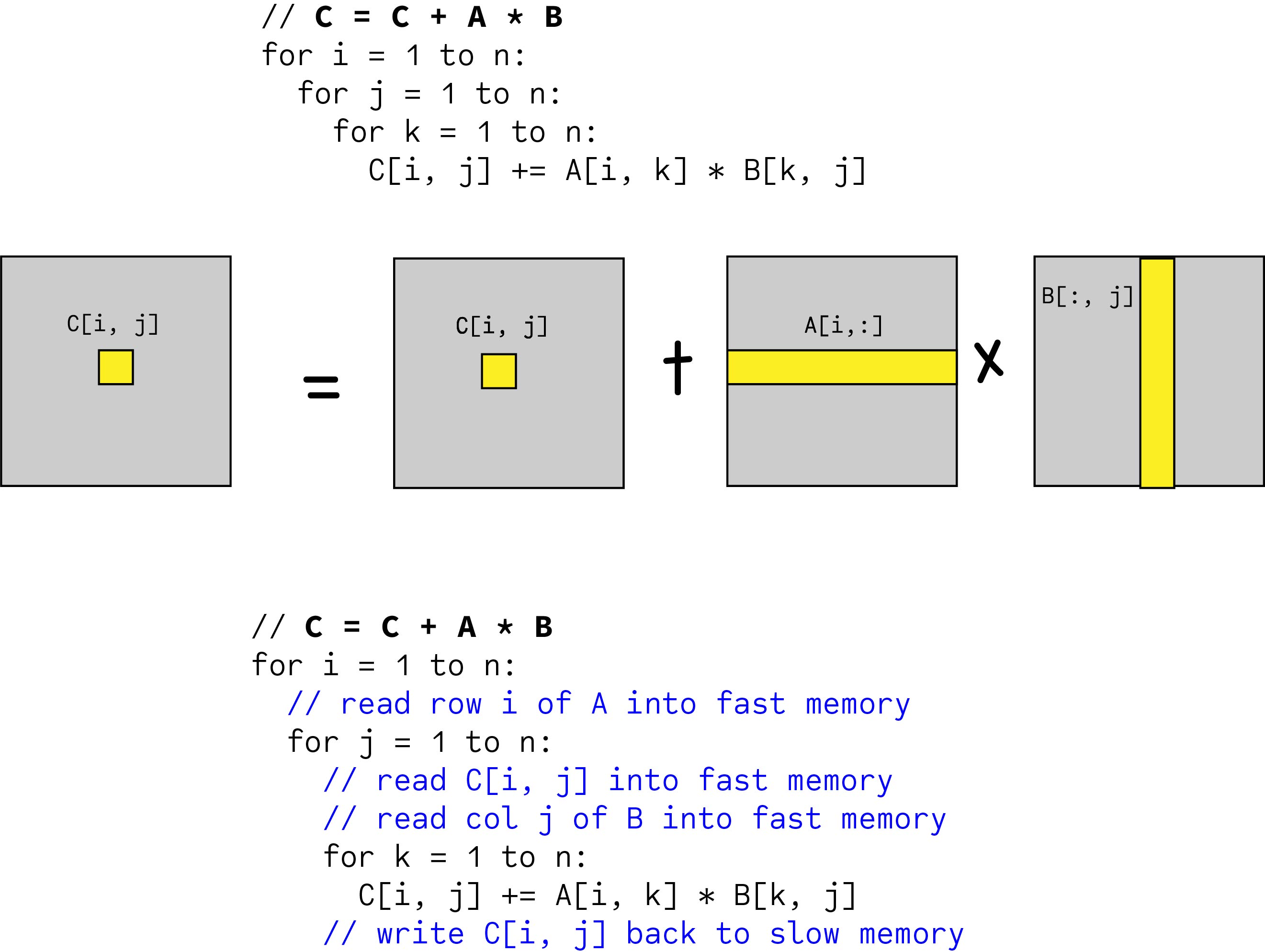

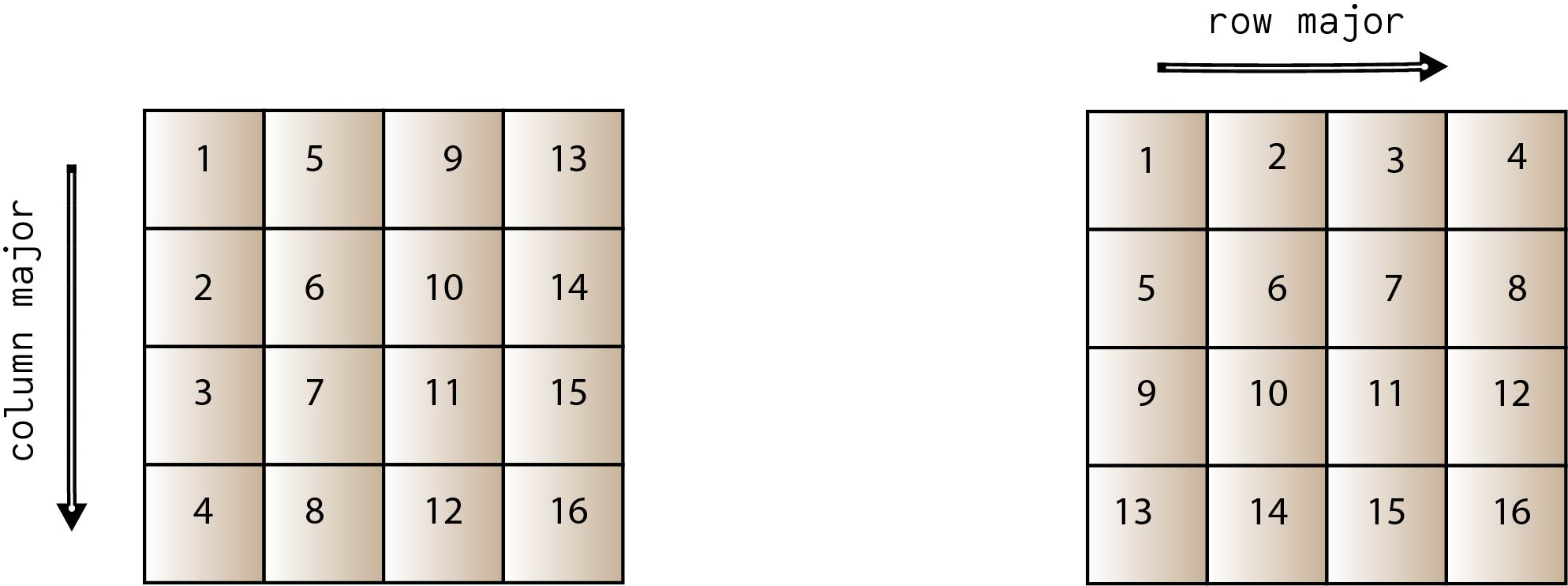

Cache blocking matrix multiplication c code. 2 n 2 read and write of each block of C 2 N 2 n N 2 2 num of times elems per block m 2N 2 n 2 Therefore the computational intensity is. Matrices are in column major order. As such we are constantly accessing new values from memory and obtain very little reuse of cached data.

The number of times we need to bring a block into the cache is nB3. Used twice Cuts the numbers of loads in half But requires enough registers to hold all items 4 registers for aIk aI1k bkj bkj1 plus 2 registers for I j and 4 registers for address of aIk address of bkj address of cIj and address of cIj. C xxtx yyty by the NumPy notation can be computed by the corresponding rows of A and columns of B.

C xxtx yyty npdot A xxtx B yyty We can further decompose this matrix multiplication into multiple small ones. E-6 printf Time lf n elapsed. Below is a simple implementation of matrix multiplication given in mmcpp.

To bring a block of X or Y into the cache we require B2c cache misses. The two inner loops read all p by n elements of B and access the same p elements in a row of A repeatedly and write one row of n elements of C. Operations to small matrices usually suffer from cache effects.

Looped over various size parameters. 24 March Due date. Pressed sparse row CSR format 8.

Your task is to implement cache blocking in the transpose_blocking function inside transposec. You may NOT assume that the matrix width n is a multiple of the blocksize. Any suggestions would be appreciated.

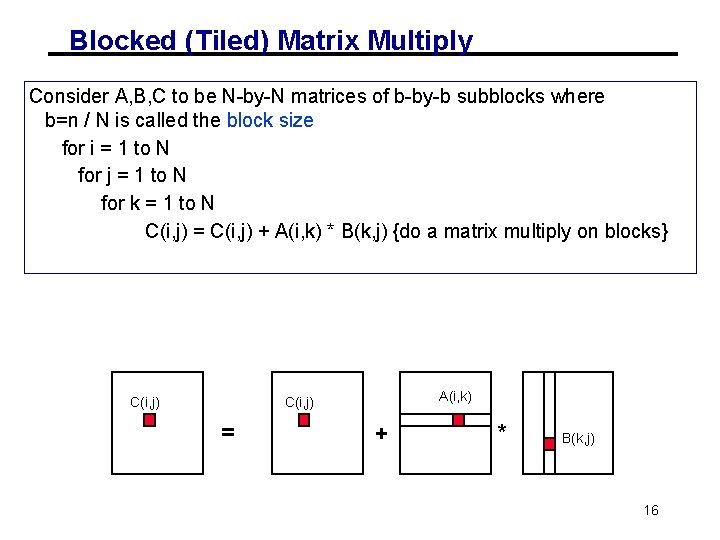

We can improve the amount of data reuse in the caches by implementing a technique. By contrast cache-oblivious algorithms are designed to make efficient use of cache without explicit blocking. CIJ CIJ AIKBKJ.

I would keep the findMin function separate instead of inlining it in the loop test. You could also implement andor test the inner two for loops separately since they will be for single-block matrix multiplication. Ci1j1 ai1k bkj1 Each aij etc.

A simple version that assumes that the array size n is an integral multiple of the block size bsize. If the matrix is small enough to fit in one or just a few cache lines it is usually faster to copy the source matrices -- for example direct copy of the left-side matrix and trasposed copy of the right side matrix -- to maximize cache locality during the operation. I L1 cache blocking I Copy optimization to aligned memory I Small 8 8 8 matrix-matrix multiply kernel found by automated search.

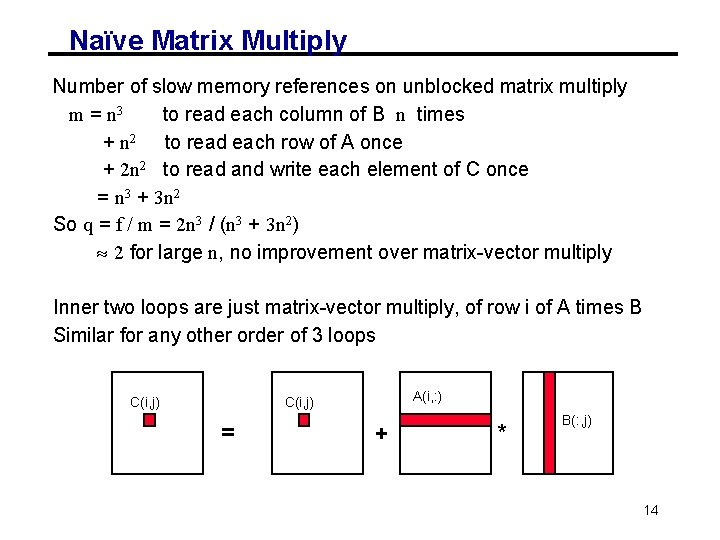

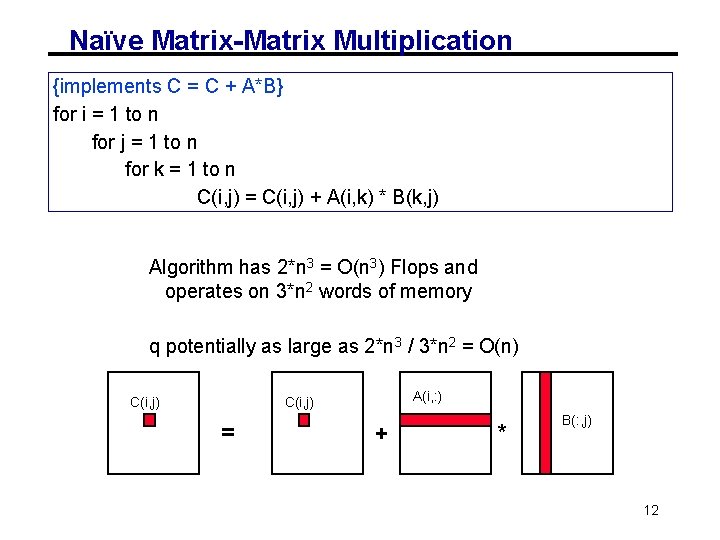

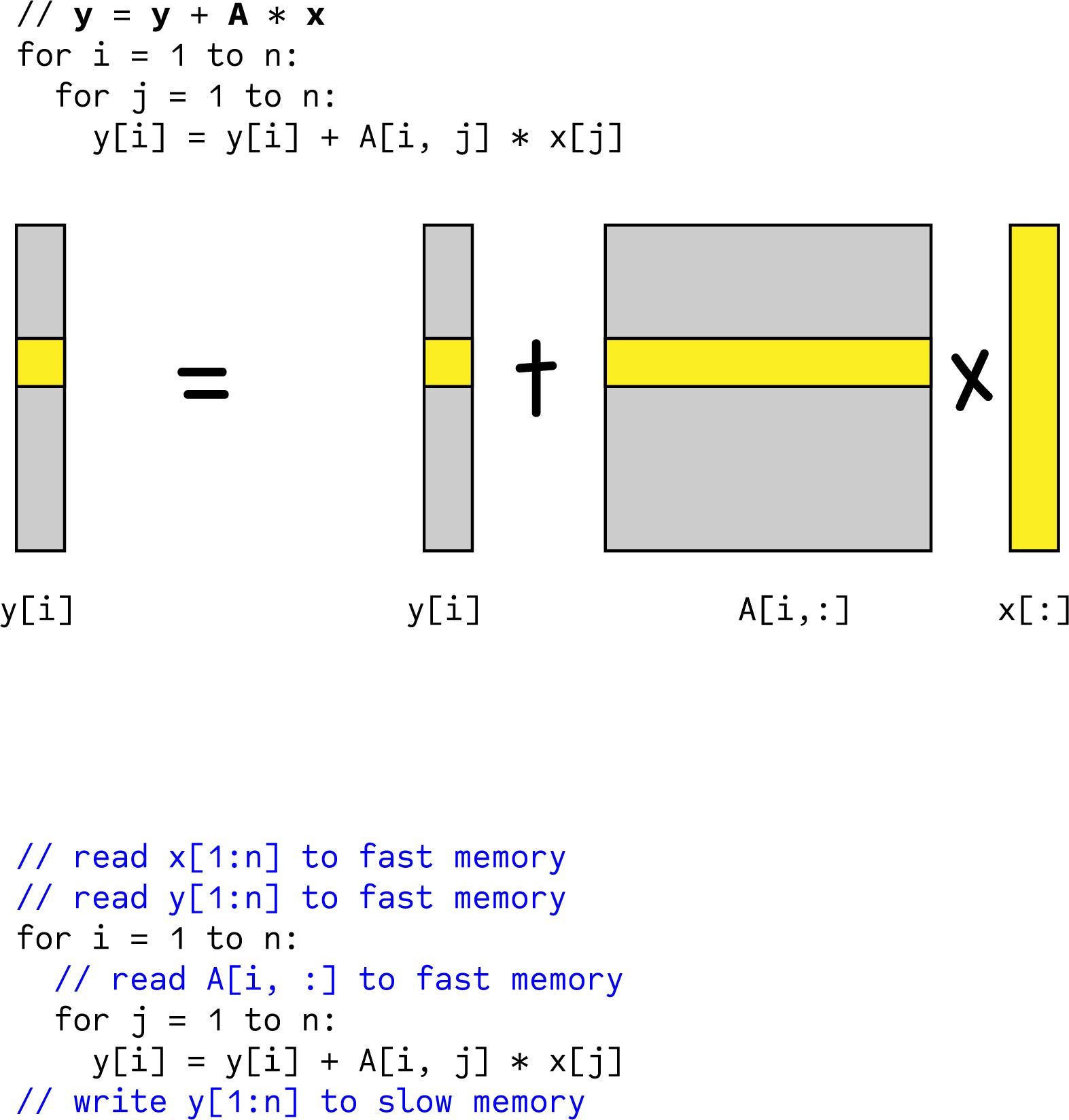

This is where we split a large problem into small. Matrix Multiplication and Cache Friendly Code COMP 273 Winter 2021 Assignment 4 Prof. In the above code for matrix multiplication note that we are striding across the entire A and B matrices to compute a single value of C.

I am trying to optimize matrix multiplication on a single processor by optimizing cache use. Tv_sec double tv2. A B C kk jj jj kk b size bsize bsize b ize 1 1 i i Use bsize x bsize block n times in succession Use 1 x bsize row sliver bsize times Update successive elements of 1 x bsize row sliver.

End store block CIJ end end Following the previous analysis we nd there are 2N2 2N3 loads and stores of blocks with this algorithm. The math behind it is that a block of C eg. Cache blocking breaks the CSR matrix into multiple smaller rcache x ccache CSR matrices and then stores these sequen-tially in memory.

Void serial_multiplydouble A double B double C int size for int i 0. After you have implemented cache blocking you can run your code by typing. My last matrix multiply I Good compiler Intel C compiler with hints involving aliasing loop unrolling and target architecture.

Elapsed double tv2. Block Matrix Multiplication Lab. Below we discuss how 1we compress the size of each block using the row startend RSE optimization and 2 further exploit the fact that each cache block is a smaller matrix.

After that we look at a technique called blocking. Many large mathematical operations on computers end up spending much of their time doing matrix multiplication. 12 April 1 Introduction In this assignment you will write code to multiply two square n n matrices of single precision floating point numbers and then optimize the code to exploit a memory cache.

Books with either fortran or matlab code sometimes have 1 based indexing assumed whereas cc uses 0 based indexing. I for int j 0. This lab involves timing the performance of a blocked matrix multiplication algorithm using various block sizes.

M n p and the size of the cache. I am implemented a block multiplication and used some loop unrolling but Im at a loss on how to optimize further though it is clearly still not very optimal based on the benchmarks. Load block CIJ into fast memory for k 1N load blocks AIK and BKJ into fast memory K k-1N1kN.

R R A I K B K J. J for int k 0. C I J R.

K Ci j Ai k Bk j. Since each block has size b b this gives. Total number of cache misses 2n3Bc.

A Makefile has been included to compile both the report and experiment program. Make ex3 transpose Where n the width of the matrix. This is the second lab for my Parallel Processing course during the Fall 2013 Semester.

Optimal Sequence Of Block Multiplications For The Matrix Multiplication Download Scientific Diagram

Optimizing C Code With Neon Intrinsics

Optimizing Cache Performance In Matrix Multiplication Ucsb Cs

Blocked Matrix Multiplication Malith Jayaweera

Optimizing Cache Performance In Matrix Multiplication Ucsb Cs

Best Block Size Value For Block Matrix Matrix Multiplication Stack Overflow

Matrix Multiplication Tiled Implementation Youtube

Optimizing Cache Performance In Matrix Multiplication Ucsb Cs

The Structure Of A Matrix Multiplication Operation Using The Blis Download Scientific Diagram

Cs61c Summer 2013 Project 2 Matrix Multiply Parallelization

Blocked Matrix Multiplication Malith Jayaweera

Blocked Matrix Multiplication Malith Jayaweera

Blocked Matrix Multiplication Malith Jayaweera

Parallel Matrix Multiplication C Parallel Processing By Roshan Alwis Tech Vision Medium

Performance X64 Cache Blocking Matrix Blocking Youtube

Github Xxycrab Matrix Multiplication With Cache Blocking And Avx Matrix Multiplicatoin Optimized With 3 Level Cache Block And Vectorization