Sparse Matrix-Vector Multiplication on the GPU

Sparse Matrix-Vector Multiplication on GPGPUs. Salvatore Filippone (Cranfield University); Valeria Cardellini, Davide Barbieri, Alessandro Fanfarillo (Università degli Studi di Roma Tor Vergata). Abstract: The multiplication of a sparse matrix by a dense vector (SpMV) is a centerpiece of scientific computing applications. Sparse matrix-vector multiplication (SpMV) is an important operation in computational science and needs to be accelerated because it often represents the dominant cost in many widely used iterative methods and eigenvalue problems.

Sparse Matrix Vector Multiplication With Cuda By Georgii Evtushenko Analytics Vidhya Medium

In recent years, graphics processing units (GPUs) have brought a new chance to high…

We denote by nnz(A) the number of nonzeros in the sparse matrix A. We implement two novel algorithms for sparse-matrix dense-matrix multiplication (SpMM) on the GPU.

(1), where $C \in \mathbb{R}^{m \times n}$. This is a growing market and need: a CPU combined with an accelerator (GPU or MIC) delivers high performance.

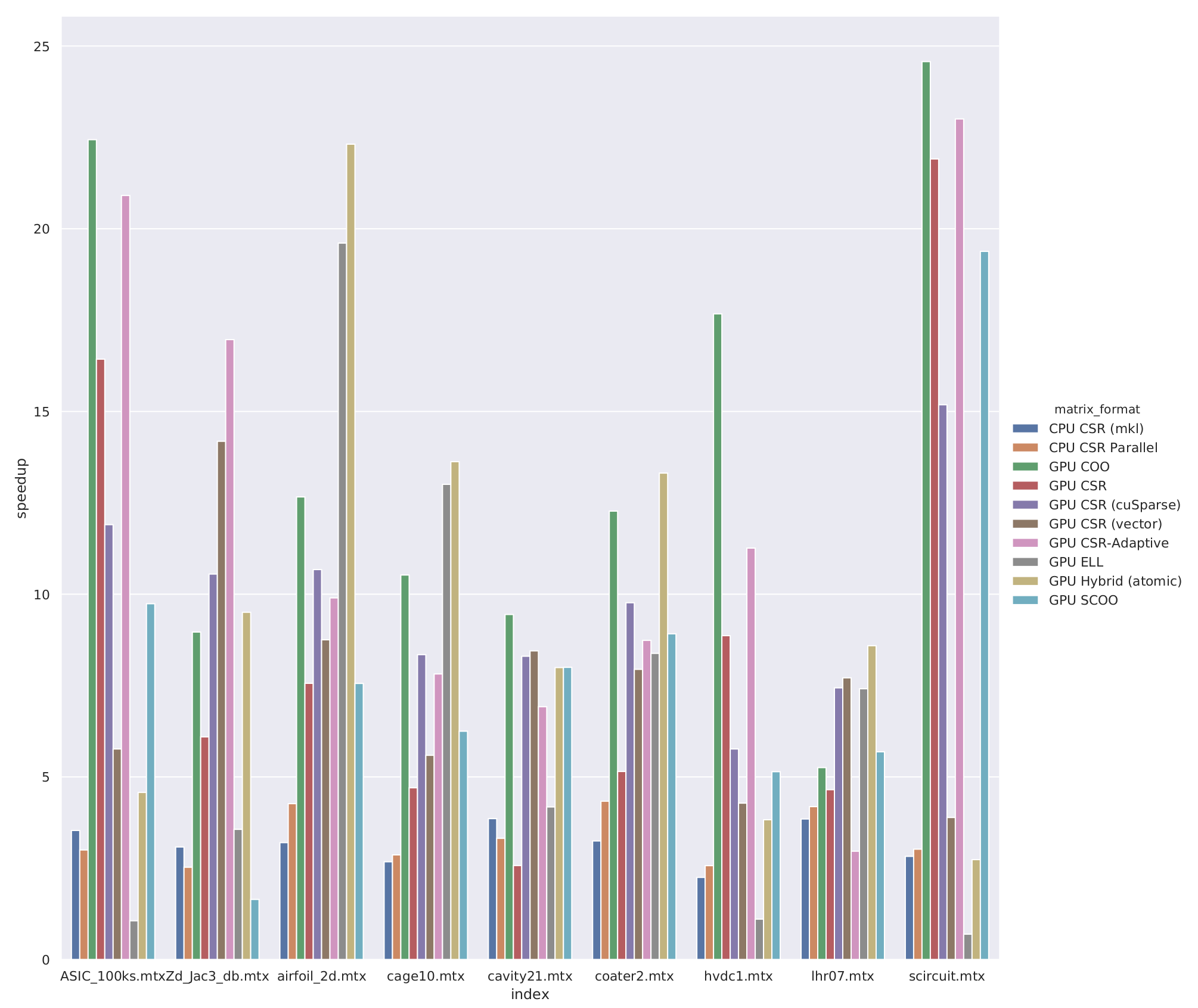

Performance on the GPU is measured for the CSR, CSR-Vector, CSR-Adaptive, ELL, COO, SCOO, and HYB matrix formats. A CuPy example begins with `import cupy as cp` and `from cupyx.scipy.sparse import csr_matrix as csr_gpu`. Given `A = some_sparse_matrix` (a `scipy.sparse.csr_matrix`) and `x = some_dense_vector` (a `numpy.ndarray`), `A_gpu = csr_gpu(A)` moves `A` to the GPU and `x_gpu = cp.array(x)` moves `x` to the GPU, after which the loop `for i in range(niter):` starts. Sparse matrix-matrix multiplication (SpGEMM) is a key operation in numerous areas from information to the physical sciences.

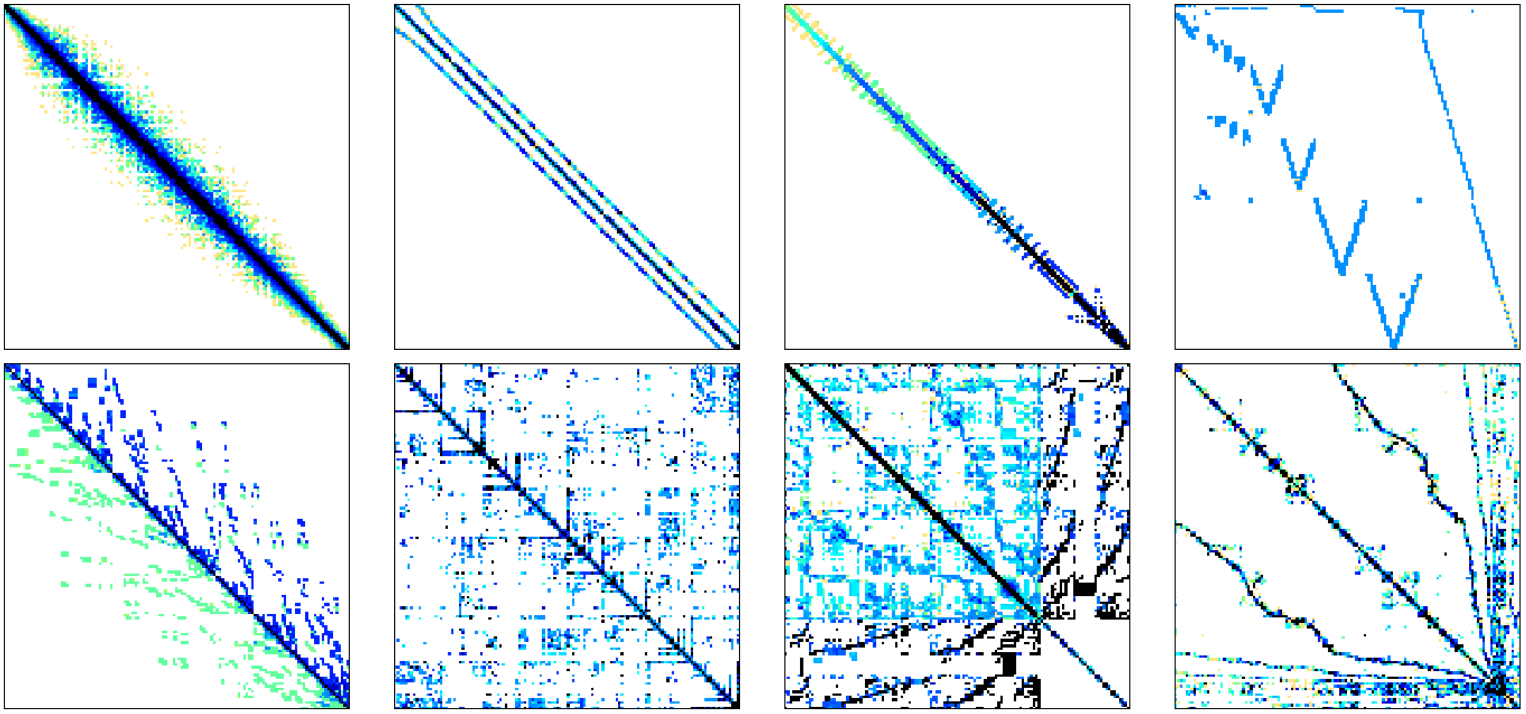

Implementing SpGEMM efficiently on throughput-oriented processors such as the graphics processing unit (GPU) requires the programmer to expose substantial fine-grained parallelism while conserving the limited off-chip memory bandwidth. Compressed sparse row (CSR) is the most frequently used format to store sparse matrices.
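As an illustration of the CSR layout mentioned above, here is a minimal pure-Python sketch that builds the three CSR arrays from a small dense matrix. The function name `dense_to_csr` and the array names `row_ptr`, `col_idx`, and `values` are assumptions chosen for this example; they do not come from any of the quoted sources.

```python
def dense_to_csr(dense):
    """Convert a dense matrix (list of rows) to CSR arrays.

    row_ptr[i]..row_ptr[i+1] delimits the nonzeros of row i,
    col_idx holds their column indices, values holds their values.
    """
    row_ptr, col_idx, values = [0], [], []
    for row in dense:
        for col, val in enumerate(row):
            if val != 0:  # explicit zeros are not stored
                col_idx.append(col)
                values.append(val)
        row_ptr.append(len(col_idx))  # running count of nonzeros so far
    return row_ptr, col_idx, values


dense = [
    [1, 0, 2],
    [0, 0, 3],
    [4, 5, 0],
]
row_ptr, col_idx, values = dense_to_csr(dense)
nnz = row_ptr[-1]  # number of stored nonzeros, nnz(A)
```

The last entry of `row_ptr` equals the number of stored nonzeros, so the format needs one integer per row plus one integer and one value per nonzero.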

Multiple sparse matrix formats are available, as well as their associated sparse matrix-vector multiplication (SpMV) implementations on the CPU and GPU. Here you can find some performance results for sparse matrix-vector multiplication on the CPU and GPU. It is the essential kernel…

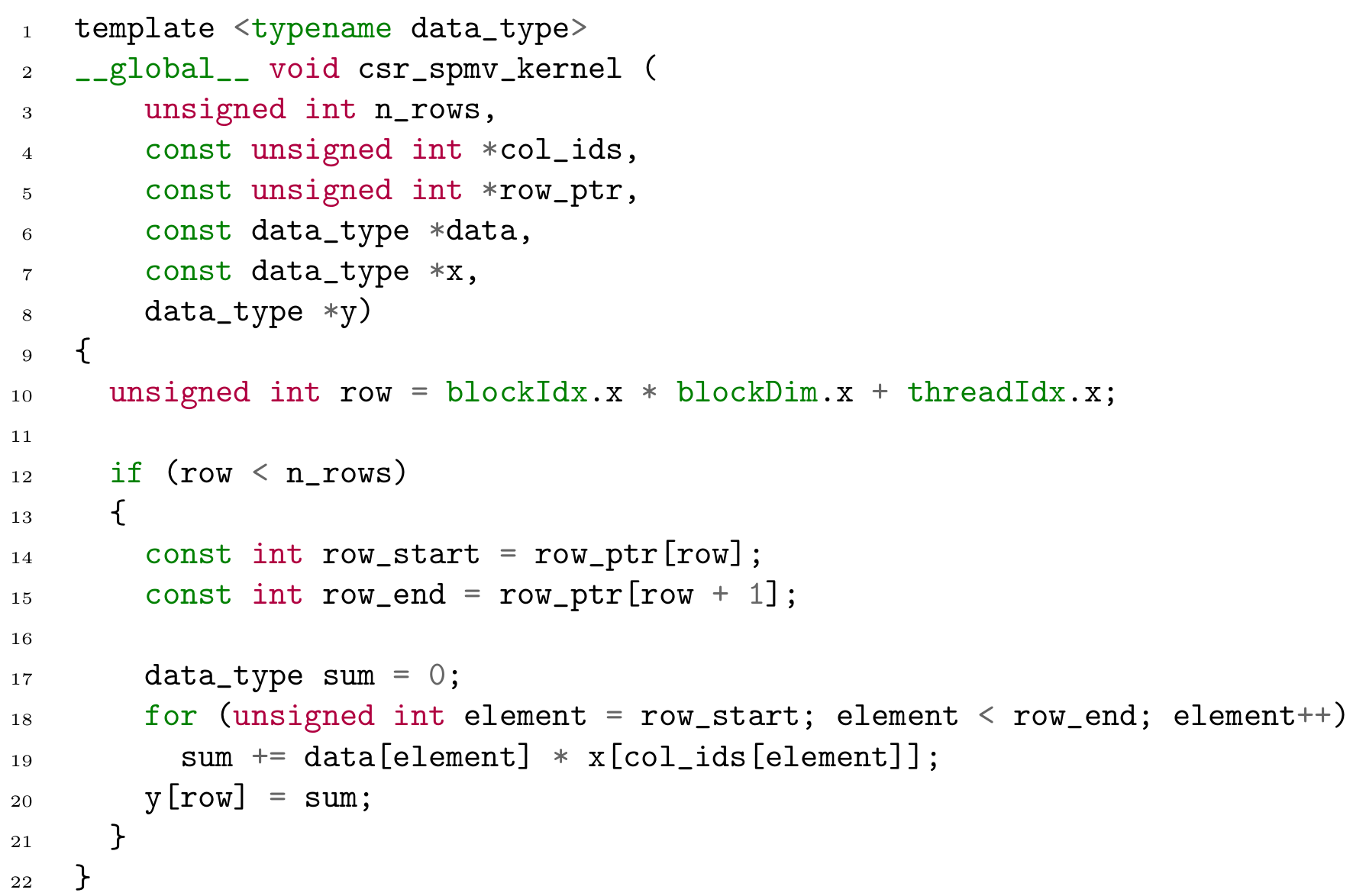

More generally, SpMxV can… In this paper we discuss data structures and algorithms for SpMV that are efficiently implemented on the CUDA platform for the fine-grained parallel architecture of the GPU. Numerical experiments show that the TV-ADM with the presented acceleration strategy could achieve a 96 times speedup.

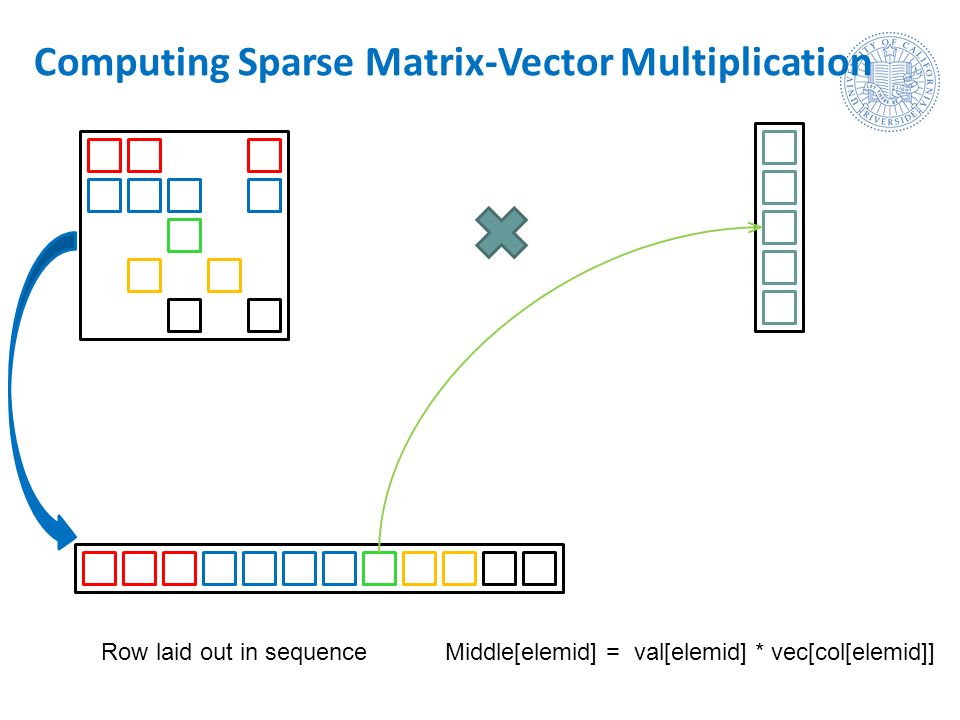

Sparse matrix-vector multiplication (SpMxV) is a mathematical kernel that takes the form of… A new sparse matrix-vector multiplication graphics processing unit algorithm designed for finite element problems. Outline: Intro and Motivation; Sparse Matrices; Matrix Formats; SpMV; Parallel SpMV; Performance; Conclusion; Extra Notes. Parallel Computing: parallel hardware is everywhere.

That's because of the irregular data access patterns brought by sparse data structures. Research on sparse matrix-vector multiplication (SpMV) also shows similar behavior [3-8]. 2.1 SpMM: Given two sparse matrices $A \in \mathbb{R}^{m \times k}$ and $B \in \mathbb{R}^{k \times n}$, for $k, m, n \in \mathbb{N}$, SpMM multiplication computes $C = AB$.

In the final figure you can find results for single… Matrix-matrix multiplication in sparse cases is not comparable to dense cases. The sparsity of A and B implies that both input matrices are represented in a space-efficient format that avoids storing explicit zero values.
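To make the sparse-times-sparse product concrete, the following is a small sequential sketch of the row-by-row formulation commonly attributed to Gustavson, with both inputs and the output held in CSR arrays. It is an illustrative assumption, not the GPU algorithm of any quoted work, and the function and argument names are hypothetical.

```python
def csr_spgemm(a_ptr, a_idx, a_val, b_ptr, b_idx, b_val):
    """Compute C = A B with A, B, and C all in CSR form (row-wise scheme)."""
    c_ptr, c_idx, c_val = [0], [], []
    for i in range(len(a_ptr) - 1):
        acc = {}  # sparse accumulator for row i of C: column -> value
        for ka in range(a_ptr[i], a_ptr[i + 1]):
            j = a_idx[ka]  # A[i, j] is nonzero, so row j of B contributes
            for kb in range(b_ptr[j], b_ptr[j + 1]):
                col = b_idx[kb]
                acc[col] = acc.get(col, 0.0) + a_val[ka] * b_val[kb]
        for col in sorted(acc):  # keep column indices ordered within the row
            c_idx.append(col)
            c_val.append(acc[col])
        c_ptr.append(len(c_idx))
    return c_ptr, c_idx, c_val


# A = [[1, 2],      B = [[4, 0],
#      [0, 3]]           [5, 6]]
a_ptr, a_idx, a_val = [0, 2, 3], [0, 1, 1], [1.0, 2.0, 3.0]
b_ptr, b_idx, b_val = [0, 1, 3], [0, 0, 1], [4.0, 5.0, 6.0]
c_ptr, c_idx, c_val = csr_spgemm(a_ptr, a_idx, a_val, b_ptr, b_idx, b_val)
```

Unlike the dense case, the number of nonzeros in each output row is not known in advance, which is one reason the sparse product is harder to parallelize.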

We achieve this objective by proposing a novel SpMV algorithm based on the compressed sparse row (CSR) format on the GPU. Finally, based on its parallel features, the TV-ADM is computed on a graphics processing unit (GPU). In iterative methods for solving sparse linear systems and eigenvalue problems, sparse matrix-vector multiplication (SpMV) is of singular importance in sparse linear algebra.

While previous SpMM work concentrates on thread-level parallelism, we additionally focus on… $y = Ax$ (1), where $A$ is an $M \times N$ sparse matrix (the majority of its elements are zero), $y$ is an $M \times 1$ vector, and $x$ is an $N \times 1$ vector.
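The product $y = Ax$ can be sketched for a CSR matrix in a few lines of pure Python. This is a sequential reference version under the assumption that `A` is given as the arrays `row_ptr`, `col_idx`, and `values` (names chosen for this example); it only touches stored nonzeros, so the work is proportional to nnz(A).

```python
def csr_spmv(row_ptr, col_idx, values, x):
    """Compute y = A x for an M x N matrix A stored in CSR form."""
    num_rows = len(row_ptr) - 1
    y = [0.0] * num_rows
    for row in range(num_rows):
        acc = 0.0
        # Visit only the stored nonzeros of this row.
        for k in range(row_ptr[row], row_ptr[row + 1]):
            acc += values[k] * x[col_idx[k]]
        y[row] = acc
    return y


# A = [[1, 0, 2],
#      [0, 0, 3],
#      [4, 5, 0]]
row_ptr = [0, 2, 3, 5]
col_idx = [0, 2, 2, 0, 1]
values = [1.0, 2.0, 3.0, 4.0, 5.0]
x = [1.0, 2.0, 3.0]
y = csr_spmv(row_ptr, col_idx, values, x)
```

The indirect read `x[col_idx[k]]` is the source of the irregular memory accesses that make this kernel hard to run efficiently.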

Our algorithms expect the sparse input in the popular compressed-sparse-row (CSR) format and thus do not require expensive format conversion. However, CSR-based SpMVs on graphics processing units (GPUs), for example CSR-scalar and CSR-vector, usually have poor performance due to irregular memory access patterns. The matrices were taken from the SuiteSparse Matrix Collection (formerly the University of Florida Sparse Matrix Collection).
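The difference between the two kernels can be illustrated with a sequential Python simulation. CSR-scalar assigns one thread to each row, which amounts to a plain loop over the row's nonzeros; CSR-vector assigns a group of cooperating threads to each row, which is simulated below. This is not GPU code: the `lanes` parameter stands in for the warp width (4 here for readability, an assumption; it must be a power of two), and all names are hypothetical.

```python
def csr_vector_spmv(row_ptr, col_idx, values, x, lanes=4):
    """Simulate the CSR-vector scheme: `lanes` cooperating lanes per row."""
    num_rows = len(row_ptr) - 1
    y = [0.0] * num_rows
    for row in range(num_rows):
        start, end = row_ptr[row], row_ptr[row + 1]
        partial = [0.0] * lanes
        # Each lane strides through the row, so neighbouring lanes read
        # neighbouring entries of `values` and `col_idx`.
        for lane in range(lanes):
            for k in range(start + lane, end, lanes):
                partial[lane] += values[k] * x[col_idx[k]]
        # Tree reduction of the per-lane partial sums into lane 0.
        stride = lanes // 2
        while stride > 0:
            for lane in range(stride):
                partial[lane] += partial[lane + stride]
            stride //= 2
        y[row] = partial[0]
    return y


# Same 3 x 3 example: A = [[1, 0, 2], [0, 0, 3], [4, 5, 0]]
row_ptr = [0, 2, 3, 5]
col_idx = [0, 2, 2, 0, 1]
values = [1.0, 2.0, 3.0, 4.0, 5.0]
x = [1.0, 2.0, 3.0]
y_vector = csr_vector_spmv(row_ptr, col_idx, values, x)
```

In this example every row has fewer nonzeros than lanes, so most lanes sit idle; short rows are a typical case in which the vector scheme wastes work.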

Good parallel programming is not easy. Parallel programs could be very fast. Inside the loop, `x_gpu = A_gpu.dot(x_gpu)` performs the multiplication, and `x = cp.asnumpy(x_gpu)` converts the result back to a NumPy object for fast indexing. Phones, tablets, PCs, GPUs, Xbox, PS.

Then a sparse matrix-vector multiplication (SpMV) method is utilized to accelerate the projector and back-projector process. The COO and CSR matrix structures are common sparse matrix formats, and the HYB format from [8, 9] is a hybrid of the ELL and COO formats.
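As a rough sketch of how a hybrid of ELL and COO can work, the code below splits a CSR matrix into a fixed-width ELL part and a COO list for the entries that do not fit, then multiplies with both parts. The split width `ell_width`, the padding convention (column index `-1`), and all function names are assumptions made for this example rather than details taken from [8, 9].

```python
def csr_to_hyb(row_ptr, col_idx, values, ell_width):
    """Split a CSR matrix into an ELL part (ell_width slots per row) and COO overflow."""
    num_rows = len(row_ptr) - 1
    ell_cols = [[-1] * ell_width for _ in range(num_rows)]  # -1 marks padding
    ell_vals = [[0.0] * ell_width for _ in range(num_rows)]
    coo = []  # overflow entries as (row, col, value) triples
    for row in range(num_rows):
        for slot, k in enumerate(range(row_ptr[row], row_ptr[row + 1])):
            if slot < ell_width:
                ell_cols[row][slot] = col_idx[k]
                ell_vals[row][slot] = values[k]
            else:
                coo.append((row, col_idx[k], values[k]))
    return ell_cols, ell_vals, coo


def hyb_spmv(ell_cols, ell_vals, coo, x):
    """Compute y = A x from the ELL part plus the COO overflow."""
    y = [0.0] * len(ell_cols)
    for row, (cols, vals) in enumerate(zip(ell_cols, ell_vals)):
        for col, val in zip(cols, vals):
            if col >= 0:  # skip padded slots
                y[row] += val * x[col]
    for row, col, val in coo:
        y[row] += val * x[col]
    return y


# A = [[1, 0, 2],
#      [0, 0, 3],
#      [4, 5, 6]]
row_ptr = [0, 2, 3, 6]
col_idx = [0, 2, 2, 0, 1, 2]
values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x = [1.0, 2.0, 3.0]
ell_cols, ell_vals, coo = csr_to_hyb(row_ptr, col_idx, values, ell_width=2)
y_hyb = hyb_spmv(ell_cols, ell_vals, coo, x)
```

The ELL part has a regular shape that suits coalesced access, while the COO list absorbs the few long rows that would otherwise force heavy padding.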

Sparse matrix-vector multiplication on GPUs requires implementations that are carefully optimized for the underlying graphics hardware, whose architecture is massively threaded and significantly different from general CPU architectures. Sparse matrix-vector multiplication (SpMV) is an important operation in scientific computations. Optimizing sparse matrix vector multiplication using cache blocking method on Fermi GPU.

For example, for the NVIDIA Fermi GPU architecture, each executable GPU kernel is launched with a fixed…

Sparse Matrix Vector Multiplication An Overview Sciencedirect Topics

Efficient Sparse Matrix Vector Multiplication On Gpus Using The Csr Storage Format Youtube

Vector And Bcrs Sparse Matrix Storage Representations On Vector Download Scientific Diagram

Sparse Matrix Vector Multiplication Spmv A Visualization Of The Download Scientific Diagram

Pdf Implementing A Sparse Matrix Vector Product For The Sell C Sell C S Formats On Nvidia Gpus Semantic Scholar

Figure 1 From Scaleable Sparse Matrix Vector Multiplication With Functional Memory And Gpus Semantic Scholar

Sparse Matrix Vector Multiplication And Csr Sparse Matrix Storage Format Download Scientific Diagram

Sparse Matrix Vector Multiplication Parallelization And Vectorization Techenablement

Pdf The Sliced Coo Format For Sparse Matrix Vector Multiplication On Cuda Enabled Gpus Semantic Scholar

Figure 1 From Automatically Generating And Tuning Gpu Code For Sparse Matrix Vector Multiplication From A High Level Representation Semantic Scholar

Dense Matrix Vector Vs Sparse Matrix Vector Multiplication Download Scientific Diagram

Irregular Applications Sparse Matrix Vector Multiplication Ppt Video Online Download

Pdf Merge Based Parallel Sparse Matrix Vector Multiplication Semantic Scholar

Sparse Matrix Vector Multiplication Mechanism Download Scientific Diagram
