CUDA Matrix Multiplication for Non-Square Matrices

For example, multiplying a 1024x1024 matrix by a 1024x1024 matrix takes a quarter of the time needed to multiply a 1024x1024 matrix by a 1024x1023 matrix, so I have transformed the matrices into square matrices by equalizing their dimensions and filling in the added elements. The formula used to calculate the elements of d_P is:

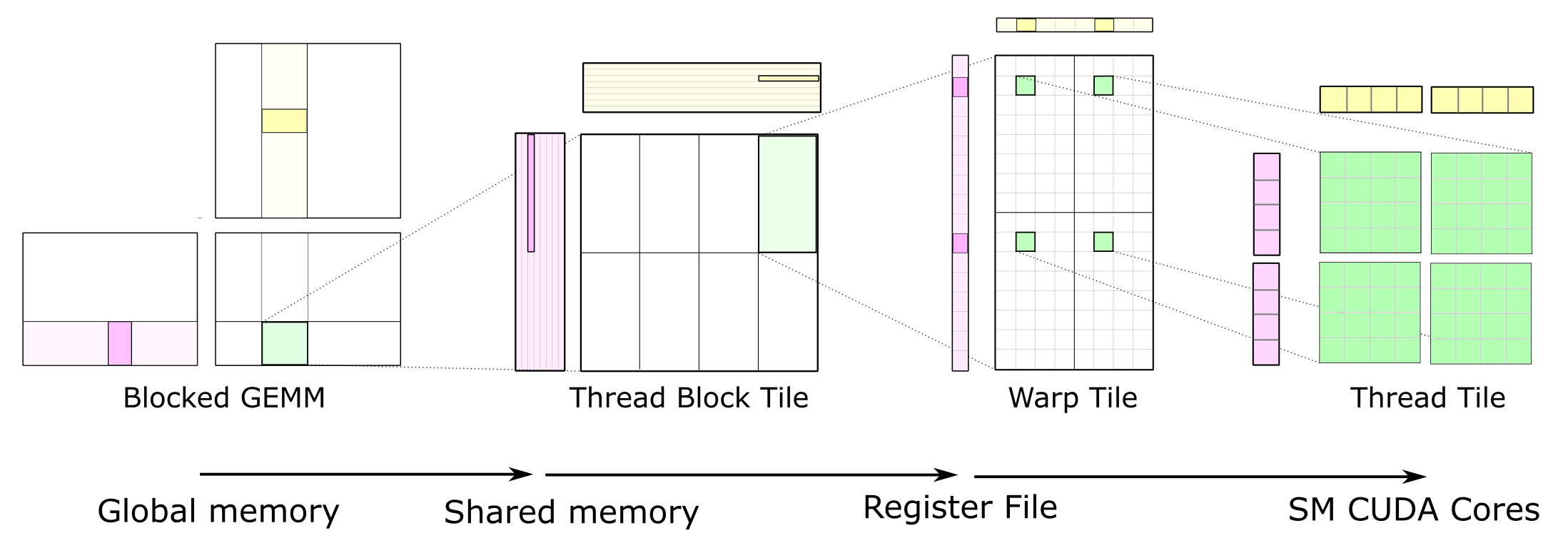

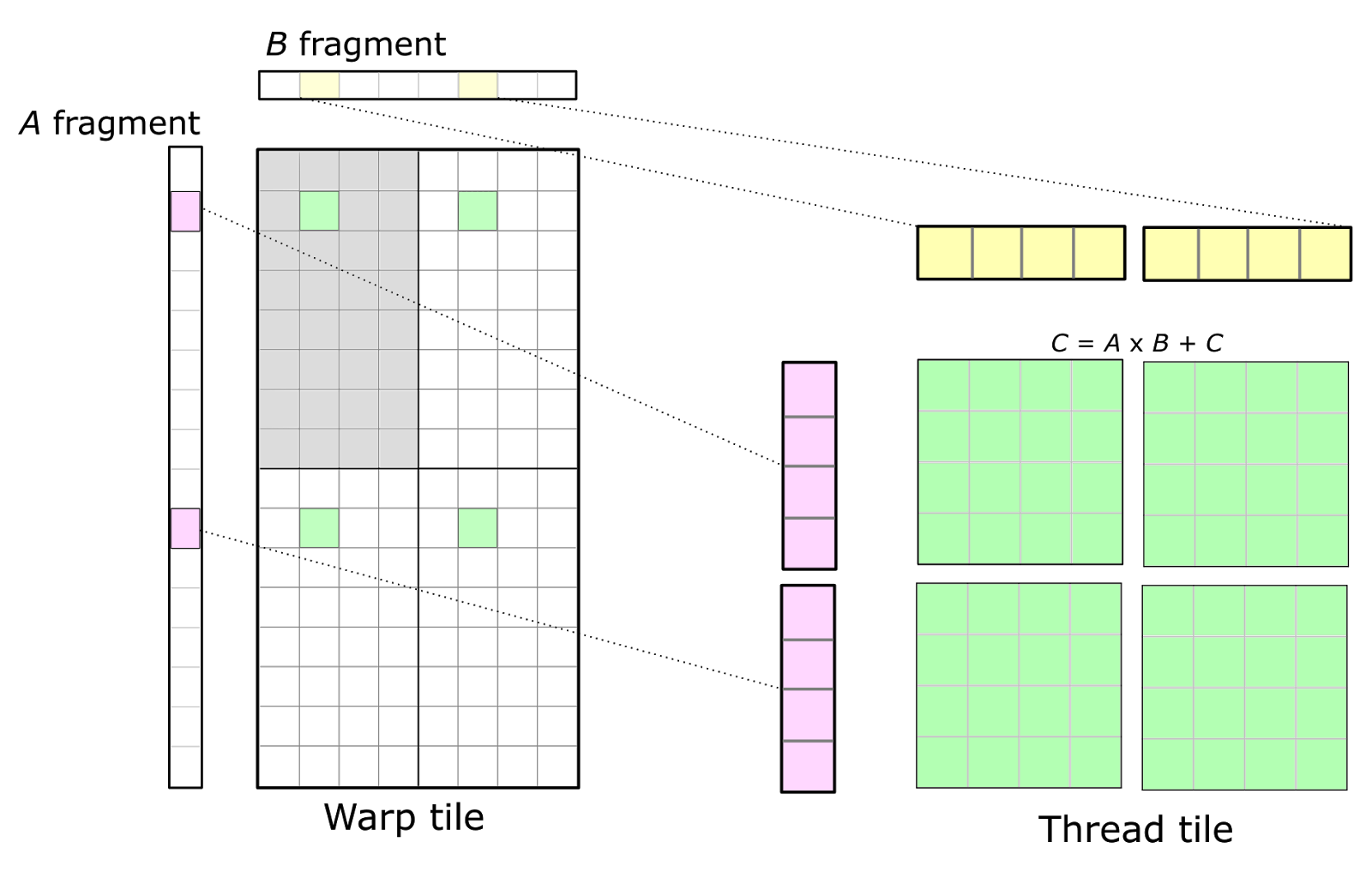

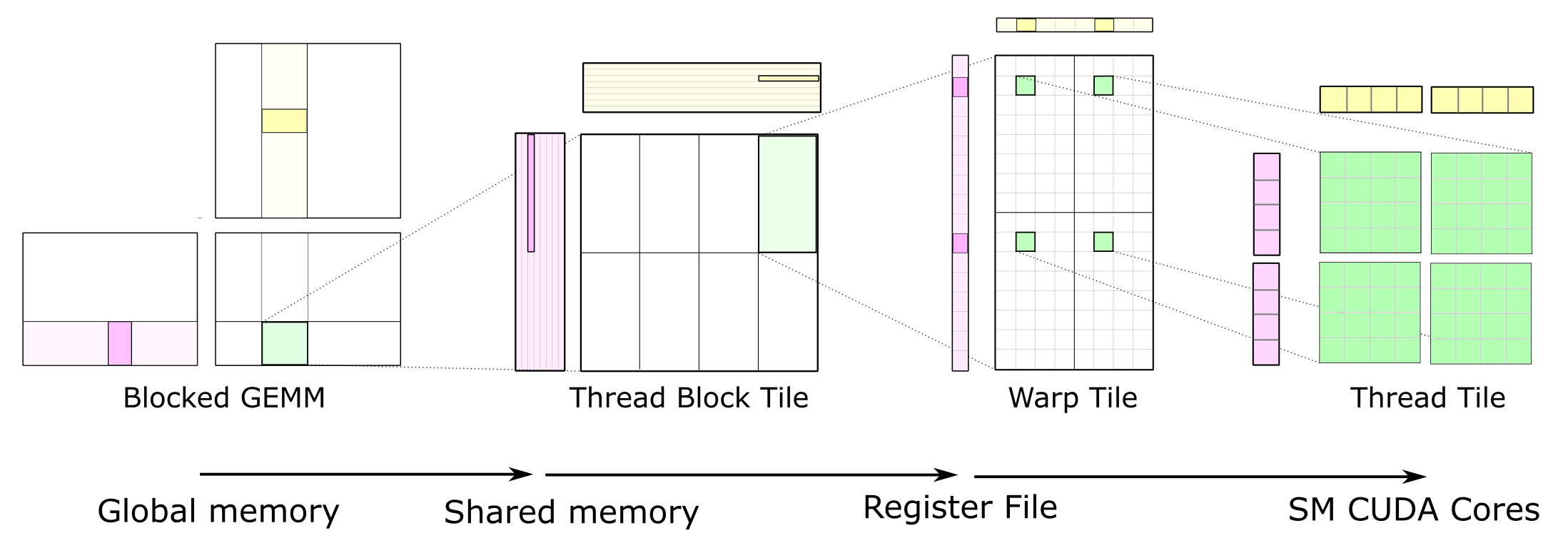

Cutlass Fast Linear Algebra In Cuda C Nvidia Developer Blog

Time elapsed on matrix multiplication of 1024x1024.

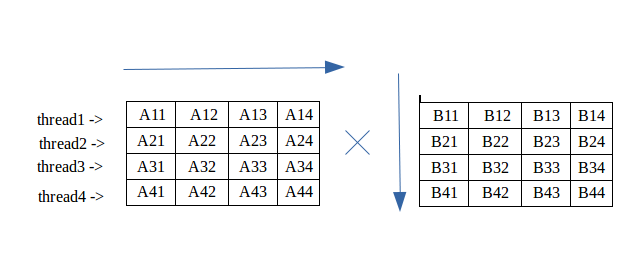

It is assumed that the student is familiar with C programming, but no other background is assumed. Each thread within the block is responsible for computing one element of the block's sub-matrix. For example, using a BLOCK_SIZE of 16 and two matrices of 3200x3200 elements, the results are correct.

Matrix multiplication between an IxJ matrix d_M and a JxK matrix d_N produces a matrix d_P with dimensions IxK. Please type in m, n and k. Specifically, the kernel function and the initialization of the thread block and kernel grid dimensions.
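Written out under the definitions above, each element of d_P is the dot product of one row of d_M with one column of d_N. The zero-based indices and the symbols $P$, $M$, $N$ (standing for d_P, d_M, d_N) are assumptions made for this sketch; the source does not state an indexing convention.

$$
P_{i,k} = \sum_{j=0}^{J-1} M_{i,j}\, N_{j,k}, \qquad 0 \le i < I, \quad 0 \le k < K
$$

If the matrices are stored row-major (also an assumption), $M_{i,j}$ sits at offset $iJ + j$, $N_{j,k}$ at offset $jK + k$, and $P_{i,k}$ at offset $iK + k$.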

May I ask how you would implement non-square matrix multiplication? During my research I have found that square matrices are multiplied in less time. So, for example, I can multiply a 3x6 matrix by a 6x3 matrix using a block size of 3, but I can't multiply ...

2n^2 data, 2n^3 flops. These are examples of level 1, 2 and 3 routines in the Basic Linear Algebra Subroutines (BLAS). A d_P element calculated by a thread is in row `blockIdx.y*blockDim.y+threadIdx.y` and column `blockIdx.x*blockDim.x+threadIdx.x`. Each thread block is responsible for computing one square sub-matrix Csub of C.
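The row and column mapping above can be sketched as a naive kernel that works for non-square shapes. This is a minimal sketch, not the code from any of the quoted posts: the names `matmul_naive` and `matmul_naive_host`, the row-major layout, and the bounds guard are assumptions, and error checking is omitted for brevity.

```cuda
#include <cuda_runtime.h>
#include <vector>

#define BLOCK_SIZE 16  // block edge; 16 is the value quoted in the text

// One thread computes one element of d_P (I x K) = d_M (I x J) * d_N (J x K).
// All matrices are assumed to be stored row-major.
__global__ void matmul_naive(const float* d_M, const float* d_N, float* d_P,
                             int I, int J, int K) {
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    // The grid is rounded up, so threads in edge blocks may fall outside d_P.
    if (row < I && col < K) {
        float sum = 0.0f;
        for (int j = 0; j < J; ++j)
            sum += d_M[row * J + j] * d_N[j * K + col];
        d_P[row * K + col] = sum;
    }
}

// Hypothetical host wrapper: copies inputs, launches the kernel, returns d_P.
std::vector<float> matmul_naive_host(const std::vector<float>& M,
                                     const std::vector<float>& N,
                                     int I, int J, int K) {
    float *d_M, *d_N, *d_P;
    cudaMalloc(&d_M, sizeof(float) * I * J);
    cudaMalloc(&d_N, sizeof(float) * J * K);
    cudaMalloc(&d_P, sizeof(float) * I * K);
    cudaMemcpy(d_M, M.data(), sizeof(float) * I * J, cudaMemcpyHostToDevice);
    cudaMemcpy(d_N, N.data(), sizeof(float) * J * K, cudaMemcpyHostToDevice);

    // Round the grid up so that every element of d_P is covered.
    dim3 block(BLOCK_SIZE, BLOCK_SIZE);
    dim3 grid((K + BLOCK_SIZE - 1) / BLOCK_SIZE,
              (I + BLOCK_SIZE - 1) / BLOCK_SIZE);
    matmul_naive<<<grid, block>>>(d_M, d_N, d_P, I, J, K);

    std::vector<float> P(static_cast<size_t>(I) * K);
    cudaMemcpy(P.data(), d_P, sizeof(float) * I * K, cudaMemcpyDeviceToHost);
    cudaFree(d_M);
    cudaFree(d_N);
    cudaFree(d_P);
    return P;
}
```

Because of the `row < I && col < K` guard, I and K do not need to be multiples of BLOCK_SIZE; the x dimension of the grid covers columns (K) and the y dimension covers rows (I).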

The code I use for matrix multiplication in CUDA lets me multiply both square and non-square matrices; however, both Width and Height MUST be multiples of the block size. Example of Matrix Multiplication. 6.1 Overview. The task of computing the product C of two matrices A and B of dimensions (wA, hA) and (wB, wA) respectively is split among several threads in the following way. In this post I will show some of the performance gains achievable using shared memory.
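The multiple-of-block-size restriction mentioned above can be lifted in a shared-memory kernel by zero-filling the parts of a tile that fall outside the matrices. The following is a hedged sketch of that idea, not the Programming Guide's or any poster's code: `matmul_tiled`, `matmul_tiled_host`, `TILE`, and the row-major layout are assumptions, and error checking is omitted.

```cuda
#include <cuda_runtime.h>
#include <vector>

#define TILE 16  // assumed tile edge (equal to the thread block edge)

// C (hA x wB) = A (hA x wA) * B (wA x wB), all row-major.
// Each block computes one TILE x TILE sub-matrix of C.
__global__ void matmul_tiled(const float* A, const float* B, float* C,
                             int hA, int wA, int wB) {
    __shared__ float As[TILE][TILE];
    __shared__ float Bs[TILE][TILE];

    int row = blockIdx.y * TILE + threadIdx.y;
    int col = blockIdx.x * TILE + threadIdx.x;
    float sum = 0.0f;

    int numTiles = (wA + TILE - 1) / TILE;  // rounded up
    for (int t = 0; t < numTiles; ++t) {
        int aCol = t * TILE + threadIdx.x;
        int bRow = t * TILE + threadIdx.y;
        // Out-of-range entries are loaded as zero, so they add nothing to sum.
        As[threadIdx.y][threadIdx.x] =
            (row < hA && aCol < wA) ? A[row * wA + aCol] : 0.0f;
        Bs[threadIdx.y][threadIdx.x] =
            (bRow < wA && col < wB) ? B[bRow * wB + col] : 0.0f;
        __syncthreads();  // tile fully loaded before anyone reads it

        for (int k = 0; k < TILE; ++k)
            sum += As[threadIdx.y][k] * Bs[k][threadIdx.x];
        __syncthreads();  // everyone done before the tile is overwritten
    }
    if (row < hA && col < wB)
        C[row * wB + col] = sum;
}

// Hypothetical host wrapper around the kernel.
std::vector<float> matmul_tiled_host(const std::vector<float>& A,
                                     const std::vector<float>& B,
                                     int hA, int wA, int wB) {
    float *dA, *dB, *dC;
    cudaMalloc(&dA, sizeof(float) * hA * wA);
    cudaMalloc(&dB, sizeof(float) * wA * wB);
    cudaMalloc(&dC, sizeof(float) * hA * wB);
    cudaMemcpy(dA, A.data(), sizeof(float) * hA * wA, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, B.data(), sizeof(float) * wA * wB, cudaMemcpyHostToDevice);

    dim3 block(TILE, TILE);
    dim3 grid((wB + TILE - 1) / TILE, (hA + TILE - 1) / TILE);
    matmul_tiled<<<grid, block>>>(dA, dB, dC, hA, wA, wB);

    std::vector<float> C(static_cast<size_t>(hA) * wB);
    cudaMemcpy(C.data(), dC, sizeof(float) * hA * wB, cudaMemcpyDeviceToHost);
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
    return C;
}
```

All threads of a block take part in loading tiles and reach both `__syncthreads()` calls, even the ones outside C; only the final store is guarded. The trade-off is a few extra comparisons per tile load in exchange for accepting arbitrary dimensions.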

In the CUDA examples, if I use the SDK code, it is valid for square matrices. `x * block_size_x + threadIdx.` We like building things on level 3 BLAS routines.

Non-square matrix multiplication in CUDA. `%%writefile matmul_naive.cu` `#define WIDTH 4096` `__global__ void matmul_kernel(float* C, float* A, float* B) { int x = blockIdx.` An Efficient Matrix Transpose in CUDA C/C++.

Execute the following cell to write our naive matrix multiplication kernel to a file named matmul_naive.cu by pressing Shift+Enter. `y * block_size_y + threadIdx.` One platform for doing so is NVIDIA's Compute Unified Device Architecture, or CUDA.

Matrix multiplication in CUDA: this is a toy program for learning CUDA; some functions are reusable for other purposes. My last CUDA C post covered the mechanics of using shared memory, including static and dynamic allocation.

We use the example of matrix multiplication to introduce the basics of GPU computing in the CUDA environment. n^2 data, 2n^2 flops. 3. Matrix-matrix multiply. `int y = blockIdx.`

`for (int k = 0;` For my GPU programming class, we've been tasked with completing certain parts of a non-square matrix multiplication program. However, when using the same BLOCK_SIZE and matrix A of 3200x1600 and ...

This paper focuses on matrix multiplication algorithms, particularly square parallel matrix multiplication using the Compute Unified Device Architecture (CUDA) programming model with the C programming language. Test results: the following tests were carried out on a Tesla M2075 card. `[lzhengchun@clus10 liu]$ ./a.out` Matrix multiplication is on the list of time-consuming problems that require huge computational resources to speed up.

The algorithm handles all matrices as square matrices. CUDA C program for matrix multiplication using shared and non-shared memory. `float sum = 0.0;`
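One way to treat every matrix as square, as described above, is to copy each operand into the top-left corner of a larger square buffer. The sketch below is host-side code only (it compiles with nvcc or any C++ compiler). The helper names `square_size` and `pad_to_square`, the choice of zero as the fill value, and rounding the edge up to a multiple of the block size are all assumptions; the source does not say how the filling was done.

```cuda
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical helper: common square edge covering I, J and K,
// rounded up to a multiple of the block size.
int square_size(int I, int J, int K, int block) {
    int n = std::max(I, std::max(J, K));
    return (n + block - 1) / block * block;
}

// Hypothetical helper: copy a row-major rows x cols matrix into the
// top-left corner of an n x n matrix; every other entry is zero.
// Requires n >= rows and n >= cols.
std::vector<float> pad_to_square(const std::vector<float>& src,
                                 int rows, int cols, int n) {
    std::vector<float> dst(static_cast<std::size_t>(n) * n, 0.0f);
    for (int r = 0; r < rows; ++r) {
        std::copy(src.begin() + static_cast<std::size_t>(r) * cols,
                  src.begin() + static_cast<std::size_t>(r + 1) * cols,
                  dst.begin() + static_cast<std::size_t>(r) * n);
    }
    return dst;
}
```

With zero fill, the added rows and columns contribute nothing to any dot product, so the top-left IxK block of the padded product equals the original d_P. Both operands must be padded to the same edge n.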

CUDA Programming Guide Version 1.1, Chapter 6. For instance, multiplying a 4x3 matrix by a 3x1 matrix. Thanks. Specifically, I will optimize a matrix transpose to show how to use shared memory to reorder strided global memory accesses into coalesced accesses.

Hi everyone, it's the first time I've posted here, but I'm having problems with matrix multiplication on non-square matrices. Non-square matrix multiplication in CUDA.

Parallel Matrix Multiplication Algorithm A Two Dimensional Blocked Download Scientific Diagram

Github Venkateshreddy74 Tiled Matrix Multiplication For Square And Non Square Matrices The Kernel Will Multiply A Matrix M By Another Matrix N Storing The Product Matrix P M N And P Will Not Necessarily Be Square Instead M Can Have

Multiplication Of Matrix Using Threads Geeksforgeeks

Parallel Matrix Multiplication C Parallel Processing By Roshan Alwis Tech Vision Medium

Github Kberkay Cuda Matrix Multiplication Matrix Multiplication On Gpu Using Shared Memory Considering Coalescing And Bank Conflicts

Matrix Multiplication Cuda Embedded Computer Architecture Gpu Assignment 2016 2017

Multiplication Kernel An Overview Sciencedirect Topics

Diagram Of Matrix Multiplication Based On Cuda Download Scientific Diagram

Opencl Matrix Multiplication Sgemm Tutorial

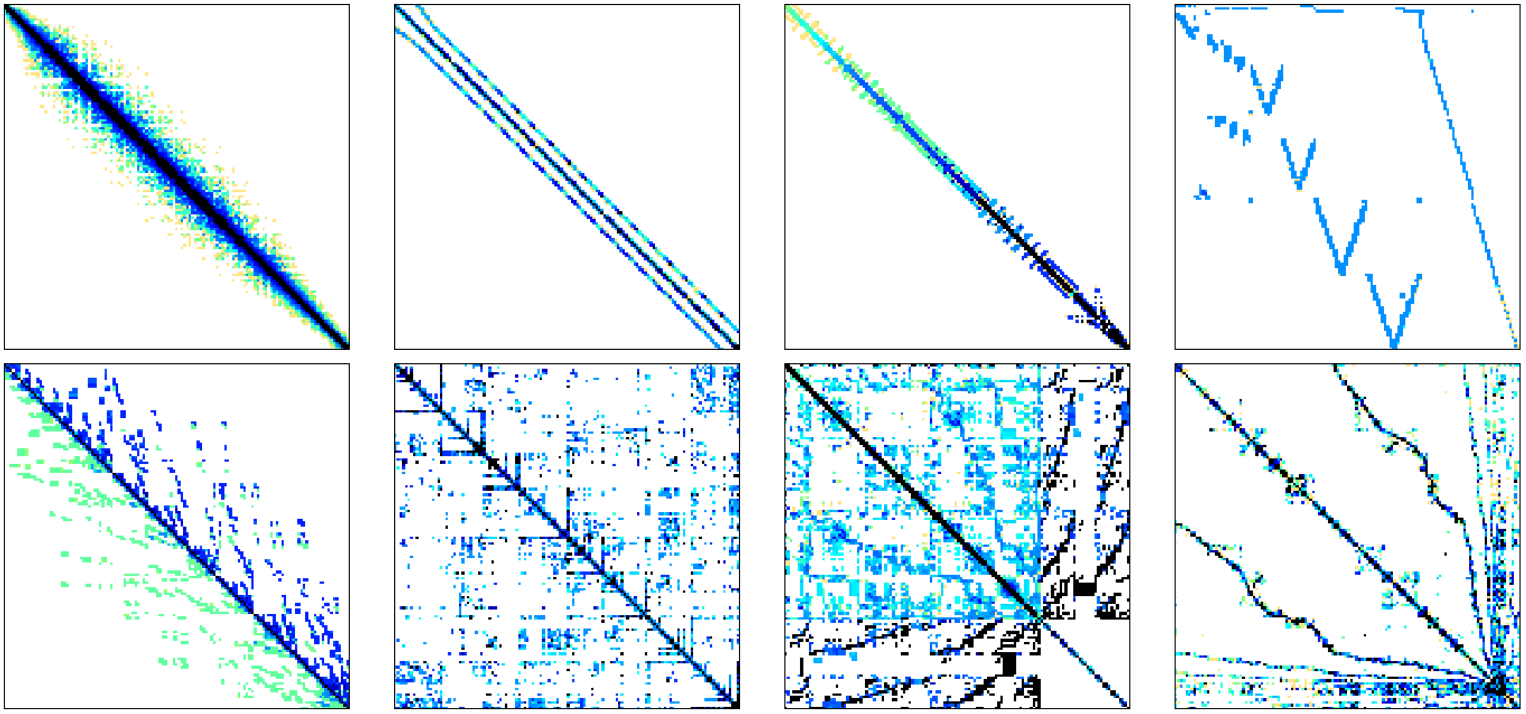

Sparse Matrix Vector Multiplication With Cuda By Georgii Evtushenko Analytics Vidhya Medium

Cannon S Matrix Multiplication Algorithm Download Scientific Diagram

Sparse Matrix Multiplication On Two Pes Download Scientific Diagram