Matrix Multiplication Benchmark C++

Note that the loop order matters a great deal for multiplication performance. The dgemm routine calculates the product of double-precision matrices.
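To make the loop-order point concrete, here is a minimal sketch of two orderings of the same triple loop. The function names `multiply_ijp` and `multiply_ipj` and the flat row-major storage are assumptions made for illustration, not something the text prescribes.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Assumption: square n x n matrices stored row-major in flat vectors.

// i-j-p order: the inner loop reads B with a stride of n elements.
void multiply_ijp(const std::vector<double>& A, const std::vector<double>& B,
                  std::vector<double>& C, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            double sum = 0.0;
            for (std::size_t p = 0; p < n; ++p) {
                sum += A[i * n + p] * B[p * n + j];
            }
            C[i * n + j] = sum;
        }
    }
}

// i-p-j order: the inner loop reads B and updates C along contiguous rows.
void multiply_ipj(const std::vector<double>& A, const std::vector<double>& B,
                  std::vector<double>& C, std::size_t n) {
    std::fill(C.begin(), C.end(), 0.0);
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t p = 0; p < n; ++p) {
            const double a = A[i * n + p];
            for (std::size_t j = 0; j < n; ++j) {
                C[i * n + j] += a * B[p * n + j];
            }
        }
    }
}
```

Both functions compute the same product; only the memory access pattern differs. With row-major storage the second ordering usually makes better use of the cache, but the size of the gap depends on the compiler, the flags and the hardware.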


144-07 - it helps but very little.

`end; toc/100` 2) Python performance: `%timeit a.dot(b, out=c)`. A matrix is a rectangular array of numbers arranged in rows and columns. The result is as below.

`B = new float[matrix_size * matrix_size];` The `multiplyMatrix` function implements a simple triple-nested `for` loop that multiplies two matrices and stores the result in a preallocated third matrix. `for (int i = 0;`

For an introduction to C++ AMP. Intel MKL provides several routines for multiplying matrices. A is an M-by-K matrix, B is a K-by-N matrix, and C is an M-by-N matrix.

For example, you can perform this operation with the transpose or conjugate transpose of the input matrices. `float *A, *B, *C;` For very large matrices, Blaze and Intel(R) MKL are almost the same in speed.

`A = new float[matrix_size * matrix_size];` To do so, we take input from the user for the number of rows, the number of columns, the first matrix elements, and the second matrix elements. `for (int p = 0;`

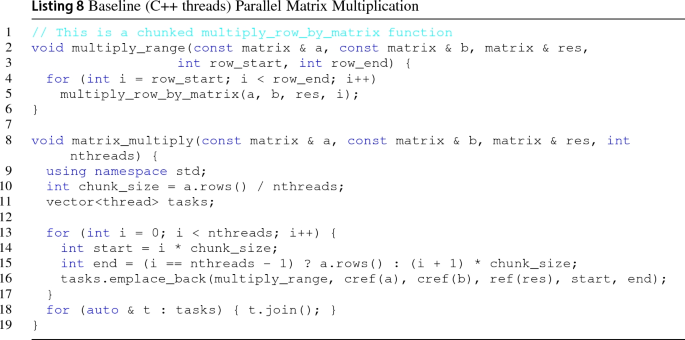

The Bitbucket repository also has a benchmark page that compares BLAS level 3 routines. I perform three-loop matrix multiplication with different 2D array definitions (pointer-to-pointer, template, and a basic definition like `float A[size][size]`) and measure the MFLOPS of the multiplication. I also defined the 2D arrays as `float A[size][size]` in C, but interestingly the result was higher than with the C++ implementation.
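A MFLOPS figure of this kind can be obtained roughly as follows. This is only a sketch: the helper names `matmul_flops`, `mflops` and `time_naive_multiply` are hypothetical, and it assumes the usual convention of counting 2·n³ floating-point operations for an n x n multiplication (one multiply and one add per inner iteration).

```cpp
#include <chrono>
#include <cstddef>
#include <vector>

// Operation count for an n x n by n x n product: n^3 multiplies plus n^3 adds.
double matmul_flops(std::size_t n) {
    return 2.0 * static_cast<double>(n) * static_cast<double>(n) * static_cast<double>(n);
}

// Convert an operation count and an elapsed time in seconds to MFLOPS.
double mflops(double flops, double seconds) {
    return flops / seconds / 1e6;
}

// Time one naive three-loop multiplication and return the elapsed seconds.
double time_naive_multiply(std::size_t n) {
    std::vector<float> A(n * n, 1.0f), B(n * n, 2.0f), C(n * n, 0.0f);
    const auto start = std::chrono::steady_clock::now();
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            float sum = 0.0f;
            for (std::size_t p = 0; p < n; ++p) {
                sum += A[i * n + p] * B[p * n + j];
            }
            C[i * n + j] = sum;
        }
    }
    const auto stop = std::chrono::steady_clock::now();
    // Read one result so the compiler cannot discard the loop entirely.
    volatile float sink = C[0];
    (void)sink;
    return std::chrono::duration<double>(stop - start).count();
}
```

Calling `mflops(matmul_flops(n), time_naive_multiply(n))` for a reasonably large `n` gives one sample; averaging several runs, as the timings quoted in this article do, makes the number more stable.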

Multiplying Matrices Using dgemm. `cblas_sgemm(CblasRowMajor, CblasNoTrans, CblasNoTrans, matrix_size, matrix_size, matrix_size, 1.0, A, matrix_size, B, matrix_size, 0.0, C, matrix_size);` 1) MATLAB performance:

`C = new float[matrix_size * matrix_size];` A 3x2 matrix has 3 rows and 2 columns. `i < matrix_size * matrix_size;`

GEMM computes `C = alpha * A * B + beta * C`, where A, B, and C are matrices. This version is 25 times faster, but unfortunately even that is not enough for large matrices.
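The GEMM operation can be written out as a plain reference loop, which makes the roles of the two scalars explicit. The function `gemm_reference` below is a hypothetical illustration, not the MKL or BLAS API; it assumes row-major storage with A of size M x K, B of size K x N and C of size M x N, and no transposition.

```cpp
#include <cstddef>
#include <vector>

// Reference GEMM: C = alpha * A * B + beta * C (no transposes, row-major).
void gemm_reference(std::size_t M, std::size_t N, std::size_t K,
                    double alpha, const std::vector<double>& A,
                    const std::vector<double>& B,
                    double beta, std::vector<double>& C) {
    for (std::size_t i = 0; i < M; ++i) {
        for (std::size_t j = 0; j < N; ++j) {
            double acc = 0.0;
            for (std::size_t p = 0; p < K; ++p) {
                acc += A[i * K + p] * B[p * N + j];
            }
            // Scale the product by alpha and the previous contents of C by beta.
            C[i * N + j] = alpha * acc + beta * C[i * N + j];
        }
    }
}
```

With `alpha = 1` and `beta = 0` this reduces to an ordinary matrix product; with `beta = 1` the product is accumulated into the existing contents of C.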

The most widely used is the dgemm routine. With NumPy linked against MKL. Here I will try to extend this benchmark by creating a Python module in C and calling that module from Python.

`A[i] = rand();` For example, an 8x8 matrix multiplication is a trivial calculation that should not have any threads created for it, and at the other end of the spectrum a 1024x1024 matrix multiplication would create 1024 threads, which is extremely excessive. `tic; for i=1:100, c=a*b;`
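One way to avoid both extremes is to decouple the thread count from the matrix size. The sketch below is an assumption-laden illustration: `choose_thread_count`, `multiply_rows`, `multiply_parallel` and the serial cutoff of 64 are all hypothetical choices, not values taken from the discussion above.

```cpp
#include <algorithm>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical heuristic: stay serial for small matrices, otherwise use at
// most one thread per hardware thread (and never more threads than rows).
std::size_t choose_thread_count(std::size_t n, std::size_t hardware_threads,
                                std::size_t serial_cutoff = 64) {
    if (n <= serial_cutoff || hardware_threads <= 1) return 1;
    return std::min(n, hardware_threads);
}

// Compute rows [row_begin, row_end) of C = A * B (row-major, n x n).
void multiply_rows(const std::vector<float>& A, const std::vector<float>& B,
                   std::vector<float>& C, std::size_t n,
                   std::size_t row_begin, std::size_t row_end) {
    for (std::size_t i = row_begin; i < row_end; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            float sum = 0.0f;
            for (std::size_t p = 0; p < n; ++p) {
                sum += A[i * n + p] * B[p * n + j];
            }
            C[i * n + j] = sum;
        }
    }
}

// Split the rows of C into contiguous chunks, one chunk per worker thread.
void multiply_parallel(const std::vector<float>& A, const std::vector<float>& B,
                       std::vector<float>& C, std::size_t n) {
    const std::size_t hw =
        std::max<std::size_t>(1, std::thread::hardware_concurrency());
    const std::size_t threads = choose_thread_count(n, hw);
    if (threads == 1) {
        multiply_rows(A, B, C, n, 0, n);
        return;
    }
    const std::size_t chunk = (n + threads - 1) / threads;
    std::vector<std::thread> workers;
    for (std::size_t k = 0; k < threads; ++k) {
        const std::size_t row_begin = k * chunk;
        const std::size_t row_end = std::min(n, row_begin + chunk);
        if (row_begin >= row_end) break;
        workers.emplace_back([&A, &B, &C, n, row_begin, row_end] {
            multiply_rows(A, B, C, n, row_begin, row_end);
        });
    }
    for (auto& worker : workers) worker.join();
}
```

Each worker writes a disjoint set of rows of C, so no locking is needed. The cutoff should be tuned by measurement on the target machine.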

`C(i, j) += A(i, p) * B(p, j);` A good example of that is the Blaze library. C++ Program to Perform Matrix Multiplication.

An example of a matrix is as follows. That is why I believe you can actually beat these BLAS implementations using C++ template libraries that make heavy use of SIMD and of OpenMP for multithreading. Time: 143 - 07 ms with 10 runs performed.

For simplicity, let us assume scalars alpha = beta = 1 in the following examples. The dimensions of the result matrix are taken from the rows of the first matrix and the columns of the second matrix. Efficient Matrix Multiplication on GPUs.
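The dimension rule can be sketched with a small matrix type. The `Matrix` struct and the `multiply` function here are hypothetical helpers written for illustration; they assume row-major storage and reject operands whose inner dimensions do not match.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Minimal row-major matrix type (hypothetical, for illustration only).
struct Matrix {
    std::size_t rows;
    std::size_t cols;
    std::vector<double> data;

    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0) {}

    double& at(std::size_t i, std::size_t j) { return data[i * cols + j]; }
    double at(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// The result has the row count of `a` and the column count of `b`.
Matrix multiply(const Matrix& a, const Matrix& b) {
    if (a.cols != b.rows) {
        throw std::invalid_argument("inner dimensions must match");
    }
    Matrix c(a.rows, b.cols);
    for (std::size_t i = 0; i < a.rows; ++i) {
        for (std::size_t j = 0; j < b.cols; ++j) {
            double sum = 0.0;
            for (std::size_t p = 0; p < a.cols; ++p) {
                sum += a.at(i, p) * b.at(p, j);
            }
            c.at(i, j) = sum;
        }
    }
    return c;
}
```

For instance, multiplying a 3x2 matrix by a 2x4 matrix yields a 3x4 result, while multiplying a 2x4 matrix by itself throws because 4 does not equal 2.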

155 - 08. `C(m, n) = A(m, k) * B(k, n)`: `for (int i = 0;` The routine can perform several calculations.

On Martin Thoma's blog I found a benchmark of Python vs. Java vs. C++ for matrix multiplication using the naive algorithm. I'll remind you that on my laptop the simple C++ AMP matrix multiplication yields a performance improvement of more than 40 times compared to the serial CPU code for M=N=W=1024. I've also installed the MKL libraries for Python.

Then we perform multiplication on the matrices entered by the user. Matrix multiplication in C++: we can add, subtract, multiply, and divide two matrices. The tiled solution that you're about to see, with TS=16 (so 16x16 = 256 threads per tile), is an additional two times faster.
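The tiling idea can be illustrated on the CPU as well. The sketch below is not the C++ AMP kernel referred to above; it is a plain C++ cache-blocking analogue that reuses the tile size TS = 16, with the hypothetical name `multiply_tiled` and row-major n x n matrices assumed.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

constexpr std::size_t TS = 16;  // tile edge, matching the TS=16 mentioned above

// Blocked multiplication: work on TS x TS tiles so that the pieces of A, B
// and C being touched stay small enough to remain in cache.
void multiply_tiled(const std::vector<float>& A, const std::vector<float>& B,
                    std::vector<float>& C, std::size_t n) {
    std::fill(C.begin(), C.end(), 0.0f);
    for (std::size_t ii = 0; ii < n; ii += TS) {
        for (std::size_t pp = 0; pp < n; pp += TS) {
            for (std::size_t jj = 0; jj < n; jj += TS) {
                // Clamp tile bounds so sizes that are not multiples of TS work.
                const std::size_t i_end = std::min(ii + TS, n);
                const std::size_t p_end = std::min(pp + TS, n);
                const std::size_t j_end = std::min(jj + TS, n);
                for (std::size_t i = ii; i < i_end; ++i) {
                    for (std::size_t p = pp; p < p_end; ++p) {
                        const float a = A[i * n + p];
                        for (std::size_t j = jj; j < j_end; ++j) {
                            C[i * n + j] += a * B[p * n + j];
                        }
                    }
                }
            }
        }
    }
}
```

On a GPU the tile is loaded into fast on-chip memory shared by the threads of a tile; on a CPU the same partitioning aims at cache reuse. Whether it pays off, and by how much, has to be measured.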

`for (int j = 0;`
